Download the installation package and upload it to the hadoop01 server

Download URL:

https://www.apache.org/dyn/closer.lua/hbase/2.2.6/hbase-2.2.6-bin.tar.gz

Upload the package to /bigdata/soft on hadoop01 and extract it:

[hadoop@hadoop01 ~]$ cd /bigdata/soft/

[hadoop@hadoop01 soft]$ tar -zxvf hbase-2.2.6-bin.tar.gz -C /bigdata/install/
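If hadoop01 has internet access, the download, upload and extract steps could be collapsed into one command run on the server itself. The following is a minimal sketch under that assumption. It also assumes the 2.2.6 release is still served from the Apache archive at the URL built below (older releases are moved there from the mirrors). The function names `hbase_url` and `fetch_and_extract_hbase` are hypothetical.

```shell
#!/usr/bin/env bash
# Build the (assumed) Apache archive URL for a given HBase binary release.
hbase_url() {
  local v=${1:-2.2.6}
  echo "https://archive.apache.org/dist/hbase/${v}/hbase-${v}-bin.tar.gz"
}

# Hypothetical helper: download into the soft dir, then unpack into the install dir.
fetch_and_extract_hbase() {
  local v=${1:-2.2.6} soft=${2:-/bigdata/soft} install=${3:-/bigdata/install}
  mkdir -p "$soft" "$install"
  # -f: fail on HTTP errors instead of saving an error page; -L: follow redirects
  curl -fL -o "$soft/hbase-${v}-bin.tar.gz" "$(hbase_url "$v")" || return 1
  tar -zxf "$soft/hbase-${v}-bin.tar.gz" -C "$install"
}

# Usage on hadoop01:
# fetch_and_extract_hbase 2.2.6 /bigdata/soft /bigdata/install
```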

[hadoop@hadoop01 soft]$ cd /bigdata/install/hbase-2.2.6/conf

[hadoop@hadoop01 conf]$ vi hbase-env.sh

export JAVA_HOME=/bigdata/install/jdk1.8.0_141

export HBASE_MANAGES_ZK=false
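Editing hbase-env.sh in vi works, but for repeatable setups the two exports could also be applied non-interactively. The following is a minimal sketch; `set_env_export` is a hypothetical helper. It assumes the file is a plain shell script in which an uncommented `export NAME=` line can be safely dropped and appended again.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ensure FILE contains exactly one "export NAME=VALUE" line.
# Commented-out defaults (e.g. "# export JAVA_HOME=...") are left untouched;
# an existing uncommented export of NAME is replaced.
set_env_export() {
  local file=$1 name=$2 value=$3 tmp
  touch "$file"
  tmp=$(mktemp)
  # Copy every line except a previous uncommented export of this variable
  grep -v "^export ${name}=" "$file" > "$tmp" || true
  printf 'export %s=%s\n' "$name" "$value" >> "$tmp"
  # Write back through the original file so its permissions are preserved
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage on hadoop01:
# set_env_export /bigdata/install/hbase-2.2.6/conf/hbase-env.sh JAVA_HOME /bigdata/install/jdk1.8.0_141
# set_env_export /bigdata/install/hbase-2.2.6/conf/hbase-env.sh HBASE_MANAGES_ZK false
```

HBASE_MANAGES_ZK=false tells HBase not to launch its own ZooKeeper. Instead it uses the separately run ensemble named in hbase-site.xml.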

[hadoop@hadoop01 conf]$ vi hbase-site.xml

<configuration>
  <!-- Path on HDFS where HBase stores its data -->
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://hadoop01:8020/hbase</value>
  </property>
  <!-- Whether HBase runs in distributed mode -->
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <!-- ZooKeeper quorum hosts; separate multiple hosts with "," -->
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>hadoop01,hadoop02,hadoop03:2181</value>
  </property>
  <!-- Port of the HBase Master web UI -->
  <property>
    <name>hbase.master.info.port</name>
    <value>60010</value>
  </property>
  <!-- Must be set in distributed mode; otherwise HMaster often fails to start -->
  <property>
    <name>hbase.unsafe.stream.capability.enforce</name>
    <value>false</value>
  </property>
</configuration>
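The same five properties could be generated by a script instead of typed in vi. The following is a minimal sketch using a hypothetical `write_hbase_site` helper. It drops the XML comments and escapes nothing, so it assumes no property name or value contains `&`, `<` or `>`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal hbase-site.xml from NAME=VALUE pairs.
# Assumes names/values contain no XML special characters (nothing is escaped).
write_hbase_site() {
  local out=$1 pair
  shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    for pair in "$@"; do
      # Split on the first "=" only, so a value may itself contain "="
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "${pair%%=*}" "${pair#*=}"
    done
    echo '</configuration>'
  } > "$out"
}

# Usage on hadoop01:
# write_hbase_site /bigdata/install/hbase-2.2.6/conf/hbase-site.xml \
#   hbase.rootdir=hdfs://hadoop01:8020/hbase \
#   hbase.cluster.distributed=true \
#   hbase.zookeeper.quorum=hadoop01,hadoop02,hadoop03:2181 \
#   hbase.master.info.port=60010 \
#   hbase.unsafe.stream.capability.enforce=false
```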

[hadoop@hadoop01 conf]$ vi regionservers

hadoop01
hadoop02
hadoop03

[hadoop@hadoop01 conf]$ vi backup-masters

hadoop03
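regionservers and backup-masters are plain host lists with one hostname per line. The following sketch writes them without an editor, which also avoids stray blank lines. The `write_hosts` name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write one hostname per line to FILE (overwrites it).
write_hosts() {
  local file=$1
  shift
  [ "$#" -gt 0 ] || { echo "usage: write_hosts FILE HOST..." >&2; return 1; }
  printf '%s\n' "$@" > "$file"
}

# Usage in /bigdata/install/hbase-2.2.6/conf on hadoop01:
# write_hosts regionservers hadoop01 hadoop02 hadoop03
# write_hosts backup-masters hadoop03
```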

[hadoop@hadoop01 conf]$ cd /bigdata/install

[hadoop@hadoop01 install]$ xsync hbase-2.2.6/
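`xsync` is not a standard Hadoop or HBase command. It appears to be a custom distribution script set up earlier in this tutorial series. If it is not available, the following rsync-based stand-in is a hypothetical sketch. It assumes passwordless SSH from hadoop01, rsync installed on every node, and target hosts hadoop02 and hadoop03 (override them with XSYNC_HOSTS). Setting DRY_RUN=1 prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical xsync stand-in: copy each path to the same absolute location
# on every other host. DRY_RUN=1 only prints the rsync commands.
xsync_sketch() {
  local src dir name host
  for src in "$@"; do
    dir=$(cd "$(dirname "$src")" && pwd -P)   # absolute parent directory
    name=$(basename "$src")                   # basename also drops a trailing "/"
    for host in ${XSYNC_HOSTS:-hadoop02 hadoop03}; do
      # No trailing slash on the source: rsync copies the directory itself into $dir/
      if [ "${DRY_RUN:-0}" = 1 ]; then
        echo rsync -av "$dir/$name" "$host:$dir/"
      else
        rsync -av "$dir/$name" "$host:$dir/"
      fi
    done
  done
}

# Usage in /bigdata/install on hadoop01:
# xsync_sketch hbase-2.2.6/
```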

Note: perform the following steps on all three machines.

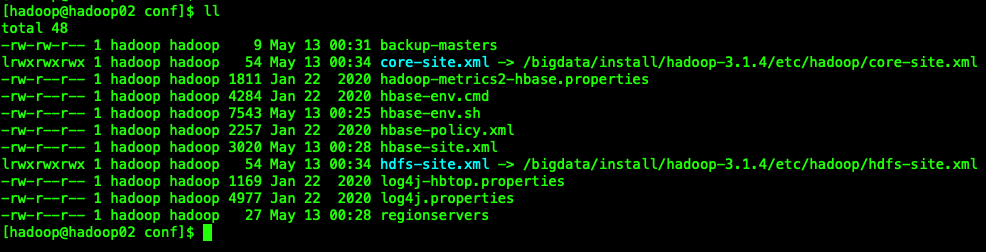

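The HBase cluster needs to read Hadoop's core-site.xml and hdfs-site.xml. So on all three machines, run the following commands to create symbolic links to these two files in HBase's conf directory: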

ln -s /bigdata/install/hadoop-3.1.4/etc/hadoop/core-site.xml /bigdata/install/hbase-2.2.6/conf/core-site.xml

ln -s /bigdata/install/hadoop-3.1.4/etc/hadoop/hdfs-site.xml /bigdata/install/hbase-2.2.6/conf/hdfs-site.xml
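Because these links must exist on every node, the two `ln -s` commands can be wrapped in a small function and run once per machine. The following is a sketch; the function name is hypothetical. It uses `ln -sf` so that running it again replaces the existing links instead of failing with "File exists".

```shell
#!/usr/bin/env bash
# Hypothetical helper: link Hadoop's client configs into HBase's conf directory.
link_hadoop_conf() {
  local hadoop_conf=$1 hbase_conf=$2 f
  for f in core-site.xml hdfs-site.xml; do
    # -f replaces an existing file/link, so the step is safe to repeat
    ln -sf "$hadoop_conf/$f" "$hbase_conf/$f" || return 1
  done
}

# Usage on each of the three machines:
# link_hadoop_conf /bigdata/install/hadoop-3.1.4/etc/hadoop /bigdata/install/hbase-2.2.6/conf
```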

sudo vi /etc/profile

export HBASE_HOME=/bigdata/install/hbase-2.2.6

export PATH=$PATH:$HBASE_HOME/bin

source /etc/profile
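After `source /etc/profile`, a quick check that the variables took effect can prevent a confusing "command not found" later. The following is a minimal sketch with a hypothetical `check_hbase_env` function. It inspects only HBASE_HOME and PATH in the current shell.

```shell
#!/usr/bin/env bash
# Hypothetical check: HBASE_HOME is set, points at an HBase install,
# and $HBASE_HOME/bin is on PATH. Returns non-zero with a message otherwise.
check_hbase_env() {
  [ -n "${HBASE_HOME:-}" ] || { echo "HBASE_HOME is not set" >&2; return 1; }
  [ -x "$HBASE_HOME/bin/start-hbase.sh" ] || {
    echo "start-hbase.sh not found under $HBASE_HOME/bin" >&2; return 1; }
  # Surround with ":" so the first and last PATH entries also match
  case ":$PATH:" in
    *":$HBASE_HOME/bin:"*) ;;
    *) echo "\$HBASE_HOME/bin is not on PATH" >&2; return 1 ;;
  esac
}

# Usage after "source /etc/profile":
# check_hbase_env && echo "HBase environment OK"
```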

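Start the services in order: first HDFS with start-dfs.sh, then ZooKeeper with zkServer.sh start on all three machines, and finally HBase:

[hadoop@hadoop01 ~]$ start-hbase.sh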
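The startup order matters. HBase stores its data under hbase.rootdir on HDFS and coordinates through ZooKeeper, so both must be running before start-hbase.sh. The following hypothetical wrapper encodes that order. It assumes passwordless SSH to the ZooKeeper hosts and that sourcing /etc/profile puts zkServer.sh on PATH there (this article does not show the ZooKeeper installation). Setting DRY_RUN=1 only prints the steps.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN=1
run_step() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical wrapper, run on hadoop01: HDFS -> ZooKeeper -> HBase
start_cluster() {
  local h
  run_step start-dfs.sh || return 1
  for h in ${ZK_HOSTS:-hadoop01 hadoop02 hadoop03}; do
    # HBASE_MANAGES_ZK=false, so ZooKeeper is started separately on each node
    run_step ssh "$h" '. /etc/profile && zkServer.sh start' || return 1
  done
  run_step start-hbase.sh
}

# Usage: DRY_RUN=1 start_cluster   (preview), then: start_cluster
```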

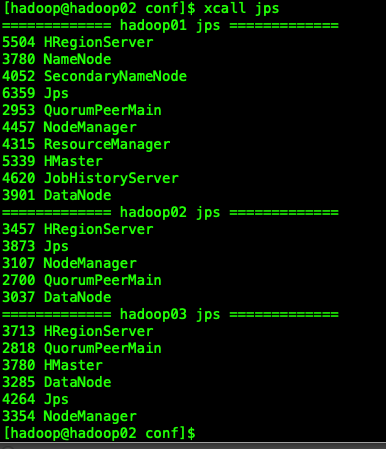

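After startup, run jps on each node to check the HBase processes:

hadoop01 and hadoop03 run HMaster and HRegionServer (the HMaster on hadoop03 is the backup master listed in backup-masters)

hadoop02 runs HRegionServer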
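jps prints one `<pid> <MainClassName>` line per JVM, so the check above can be scripted. The following is a minimal sketch with a hypothetical `check_jps` helper. It reads jps output on stdin and reports any expected process name that does not appear as a whole class name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read jps output on stdin, print "missing: NAME" for each
# expected process that is absent, and return non-zero if any is missing.
check_jps() {
  local out name missing=0
  out=$(cat)
  for name in "$@"; do
    # Compare against the second field so "HMaster" does not match e.g. "HMasterFoo"
    if ! printf '%s\n' "$out" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Usage on hadoop01 / hadoop03:  jps | check_jps HMaster HRegionServer
# Usage on hadoop02:             jps | check_jps HRegionServer
```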

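Open the HBase Master web UI in a browser at http://hadoop01:60010 (the port set by hbase.master.info.port above).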

To stop the cluster, reverse the startup order: stop HBase first, then ZooKeeper, then HDFS. Stop HBase with:

[hadoop@hadoop01 ~]$ stop-hbase.sh
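The full shutdown sequence can be scripted as well. HBase goes first, so RegionServers can flush and close their regions while HDFS and ZooKeeper are still available. ZooKeeper follows, then HDFS. The following is a hypothetical sketch. It assumes passwordless SSH to the ZooKeeper hosts and that sourcing /etc/profile puts zkServer.sh on PATH there. Setting DRY_RUN=1 only prints the steps.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN=1
run_step() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical wrapper, run on hadoop01: HBase -> ZooKeeper -> HDFS
stop_cluster() {
  local h
  # Do not touch ZooKeeper/HDFS if HBase did not shut down cleanly
  run_step stop-hbase.sh || return 1
  for h in ${ZK_HOSTS:-hadoop01 hadoop02 hadoop03}; do
    # Keep going even if one node's ZooKeeper is already stopped
    run_step ssh "$h" '. /etc/profile && zkServer.sh stop'
  done
  run_step stop-dfs.sh
}

# Usage: DRY_RUN=1 stop_cluster   (preview), then: stop_cluster
```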

Original article: https://www.cnblogs.com/tenic/p/14762834.html