I. Word frequency count:

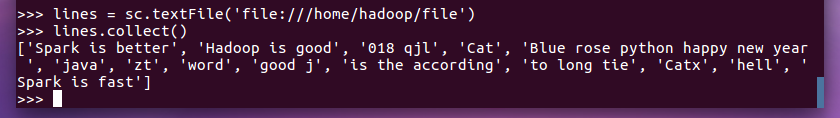

1. Read the text file into an RDD named lines

lines = sc.textFile('file:///home/hadoop/file')
lines.collect()

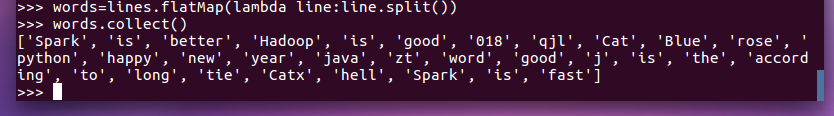

2. Split each line of text into words with flatMap()

words = lines.flatMap(lambda line: line.split())
words.collect()

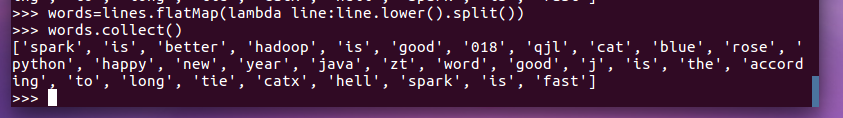

3. Convert everything to lowercase with lower()

words = lines.flatMap(lambda line: line.lower().split())
words.collect()

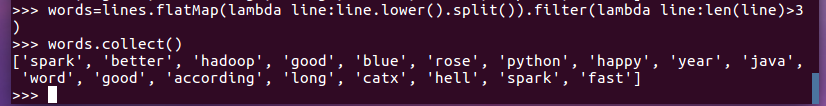

4. Remove words shorter than 3 characters with filter()

words = lines.flatMap(lambda line: line.lower().split()).filter(lambda word: len(word) >= 3)
words.collect()
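To check what steps 2 to 4 compute without a cluster, the same logic can be sketched in plain Python. The sample lines are made up for illustration, and `flat_map` is a hypothetical helper that mimics `flatMap()` by flattening the per-line lists into one list.

```python
from itertools import chain

# Made-up sample input standing in for the RDD `lines`.
sample_lines = ["The quick brown Fox", "is on a LOG"]

def flat_map(func, items):
    """Apply func to each item and flatten the results (like RDD.flatMap)."""
    return list(chain.from_iterable(func(item) for item in items))

# map-style splitting keeps one list per line ...
nested = [line.split() for line in sample_lines]
# ... while flatMap-style splitting yields one flat list of lowercased words.
words = flat_map(lambda line: line.lower().split(), sample_lines)
# Keep only words with at least 3 characters.
long_words = [word for word in words if len(word) >= 3]

print(nested)
print(long_words)
```

The nested list shows why `map()` alone is not enough here: the length filter needs individual words, not one list per line.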

5. Remove stop words

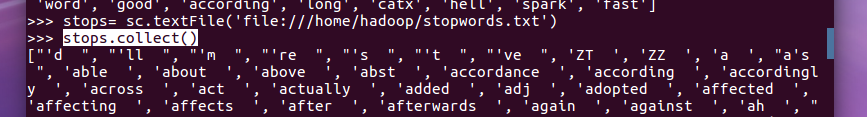

stops = sc.textFile('file:///home/hadoop/stopwords.txt')
stops.collect()

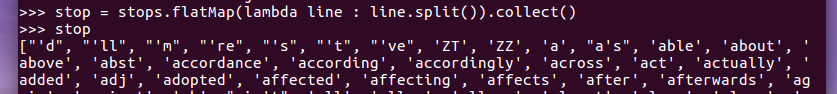

stop = stops.flatMap(lambda line: line.split()).collect()
stop

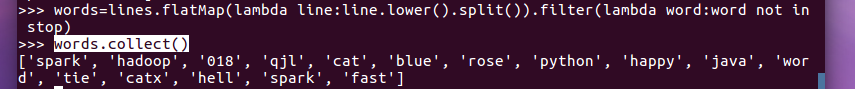

words = lines.flatMap(lambda line: line.lower().split()).filter(lambda word: word not in stop)
words.collect()
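Because `stop` is a Python list, `word not in stop` scans the whole list for every word. One common alternative, sketched here in plain Python with made-up data, is to convert the list to a `set` first. In Spark the set could also be shipped to the executors with `sc.broadcast()`, which is not shown here.

```python
# Made-up stand-ins for the collected stop-word list and the lowercased words.
stop = ["the", "is", "on", "a"]
words = ["the", "quick", "brown", "fox", "is", "on", "a", "log"]

# A set gives constant-time membership tests on average.
stop_set = set(stop)
kept = [word for word in words if word not in stop_set]

print(kept)
```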

6. Convert to key-value pairs with map()

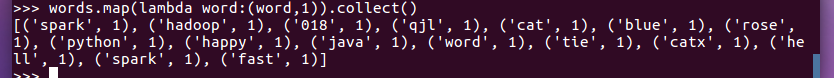

words.map(lambda word: (word, 1)).collect()

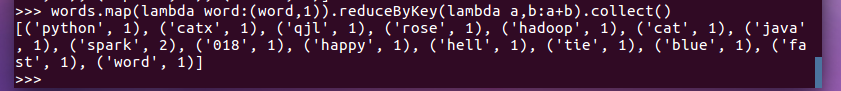

7. Count word frequencies with reduceByKey()

words.map(lambda word: (word, 1)).reduceByKey(lambda a, b: a + b).collect()

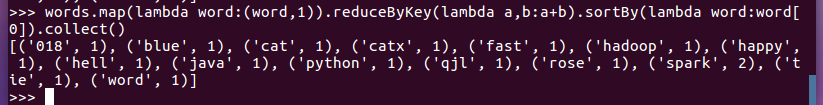
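What `reduceByKey()` does in steps 6 and 7 can be sketched on a single machine in plain Python. `reduce_by_key` is a hypothetical helper written for this illustration, not part of PySpark, and the word list is made up.

```python
def reduce_by_key(pairs, func):
    """Combine the values of each key with func, like RDD.reduceByKey locally."""
    totals = {}
    for key, value in pairs:
        # First value for a key is stored as-is; later ones are merged with func.
        totals[key] = func(totals[key], value) if key in totals else value
    return list(totals.items())

words = ["spark", "rdd", "spark", "python", "rdd", "spark"]
pairs = [(word, 1) for word in words]              # step 6: map to (word, 1)
counts = reduce_by_key(pairs, lambda a, b: a + b)  # step 7: add up the 1s

print(counts)
```

In Spark the same merge function is applied within each partition first and then across partitions, which is why it should be associative and commutative, as addition is.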

8. Sort alphabetically with sortBy(f)

words.map(lambda word: (word, 1)).reduceByKey(lambda a, b: a + b).sortBy(lambda pair: pair[0]).collect()

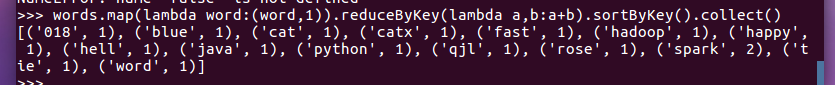

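9. Sort by word frequency with sortByKey(). Because sortByKey() orders by the key, each pair is first swapped to (count, word).

words.map(lambda word: (word, 1)).reduceByKey(lambda a, b: a + b).map(lambda pair: (pair[1], pair[0])).sortByKey().collect()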

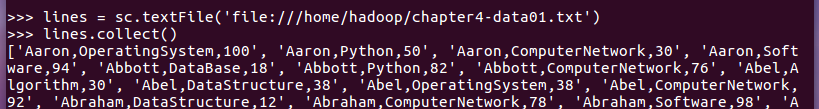
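The difference between the two sorts comes down to which element of each pair is used as the sort key. `sortByKey()` always orders by the first element of a pair, so sorting by frequency needs either the count in the first position or a `sortBy()` on the second element. A plain-Python sketch with made-up counts:

```python
# Made-up (word, count) pairs standing in for the reduceByKey() result.
counts = [("spark", 3), ("rdd", 2), ("python", 1), ("hadoop", 2)]

# Alphabetical order: sort on the word (first element).
by_word = sorted(counts, key=lambda pair: pair[0])

# Frequency order, most frequent first: sort on the count (second element).
by_count = sorted(counts, key=lambda pair: pair[1], reverse=True)

print(by_word)
print(by_count)
```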

II. Student course scores case study

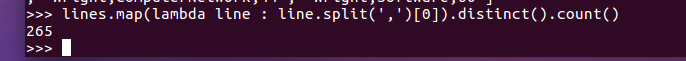

1. How many students are there in total? map(), distinct(), count()

lines.map(lambda line: line.split(',')[0]).distinct().count()

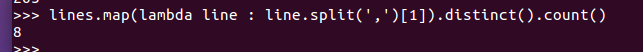

2. How many courses are offered?

lines.map(lambda line: line.split(',')[1]).distinct().count()

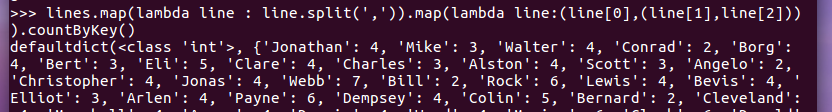
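The two counts above rely on each record being a comma-separated line with the student name in field 0 and the course in field 1. A plain-Python sketch of the same `distinct().count()` logic, using made-up records in that assumed layout:

```python
# Made-up records in the assumed "name,course,score" layout.
sample_lines = [
    "Tom,DataBase,80",
    "Tom,Algorithm,50",
    "Jim,DataBase,90",
    "Jim,Python,82",
    "Amy,Python,75",
]

# A set keeps each value once, which is what distinct() does on an RDD.
students = {line.split(",")[0] for line in sample_lines}
courses = {line.split(",")[1] for line in sample_lines}

print(len(students), len(courses))
```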

3. How many courses did each student take? map(), countByKey()

lines.map(lambda line: line.split(',')).map(lambda fields: (fields[0], (fields[1], fields[2]))).countByKey()

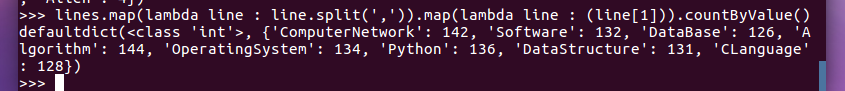

4. How many students chose each course? map(), countByValue()

lines.map(lambda line: line.split(',')).map(lambda fields: fields[1]).countByValue()

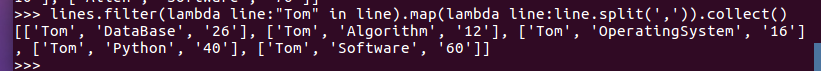
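Both `countByKey()` and `countByValue()` are actions that return a dictionary-like result to the driver. Their counting logic resembles `collections.Counter`, as this plain-Python sketch with made-up records suggests:

```python
from collections import Counter

# Made-up records in the assumed "name,course,score" layout.
sample_lines = [
    "Tom,DataBase,80",
    "Tom,Algorithm,50",
    "Jim,DataBase,90",
    "Jim,Python,82",
    "Amy,Python,75",
]
records = [line.split(",") for line in sample_lines]

# countByKey()-style: count how often each key (student name) occurs.
courses_per_student = Counter(name for name, course, score in records)

# countByValue()-style: count how often each element (course name) occurs.
students_per_course = Counter(course for name, course, score in records)

print(courses_per_student)
print(students_per_course)
```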

5. How many courses did Tom take, and what was his score in each? filter(), map(), which yield an RDD

lines.filter(lambda line: "Tom" in line).map(lambda line: line.split(',')).collect()

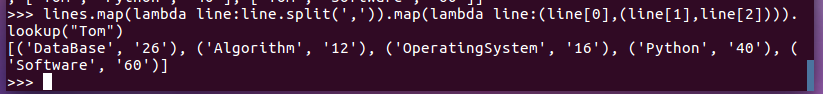

6. How many courses did Tom take, and what was his score in each? map(), lookup(), which returns a list

lines.map(lambda line: line.split(',')).map(lambda fields: (fields[0], (fields[1], fields[2]))).lookup("Tom")

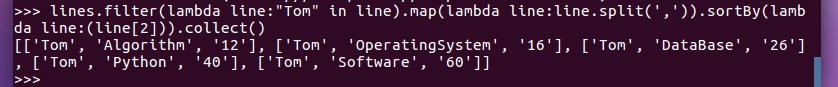
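The two approaches are not quite equivalent: `"Tom" in line` is a substring test on the whole line, while `lookup("Tom")` matches the key exactly. The plain-Python sketch below uses made-up records, including a hypothetical student named Tommy, to show where they can differ.

```python
# Made-up records; "Tommy" is included only to expose the substring match.
sample_lines = ["Tom,DataBase,80", "Tommy,DataBase,70", "Tom,Algorithm,50"]
records = [line.split(",") for line in sample_lines]

# Substring test on the raw line: also matches "Tommy".
substring_hits = [line.split(",") for line in sample_lines if "Tom" in line]

# Exact comparison on the name field: only Tom's rows.
exact_hits = [fields for fields in records if fields[0] == "Tom"]

# lookup("Tom")-style result: the values stored under the key "Tom".
tom_courses = [(course, score) for name, course, score in records if name == "Tom"]

print(len(substring_hits), len(exact_hits))
print(tom_courses)
```

If the data set has no names that contain "Tom" as a substring, both approaches give the same rows.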

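7. Sort Tom's results by score. filter(), map(), sortBy(). The score is converted with int() so that the sort is numeric rather than alphabetical.

lines.filter(lambda line: "Tom" in line).map(lambda line: line.split(',')).sortBy(lambda fields: int(fields[2])).collect()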
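Scores read from a text file are strings, so the sort key has to convert them to numbers to get numeric order. A plain-Python sketch with made-up scores shows how the two orderings can differ:

```python
# Made-up rows for Tom; scores are strings, as they are after split(",").
tom = [
    ["Tom", "DataBase", "80"],
    ["Tom", "Algorithm", "50"],
    ["Tom", "Python", "100"],
]

# String comparison: "100" sorts before "50" because "1" < "5".
as_text = sorted(tom, key=lambda fields: fields[2])

# Numeric comparison: convert the score before comparing.
as_number = sorted(tom, key=lambda fields: int(fields[2]))

print([fields[2] for fields in as_text])
print([fields[2] for fields in as_number])
```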

8. Tom's average score. map(), lookup(), mean()

I couldn't download the numpy library, so I couldn't do this one.
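The average itself does not strictly need numpy. As a minimal sketch, assuming `lookup("Tom")` returns a list of (course, score) pairs with string scores, the standard-library `statistics.mean` can do the arithmetic on the driver. The scores below are made up. PySpark's `RDD.mean()` on an RDD of numbers is another option, not shown here.

```python
from statistics import mean

# Made-up list in the shape assumed for the lookup("Tom") result.
tom_scores = [("DataBase", "80"), ("Algorithm", "50"), ("Python", "95")]

# Convert each score to int, then average with the standard library.
scores = [int(score) for course, score in tom_scores]
average = mean(scores)

print(average)
```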

Original: https://www.cnblogs.com/wsqjl/p/14674442.html