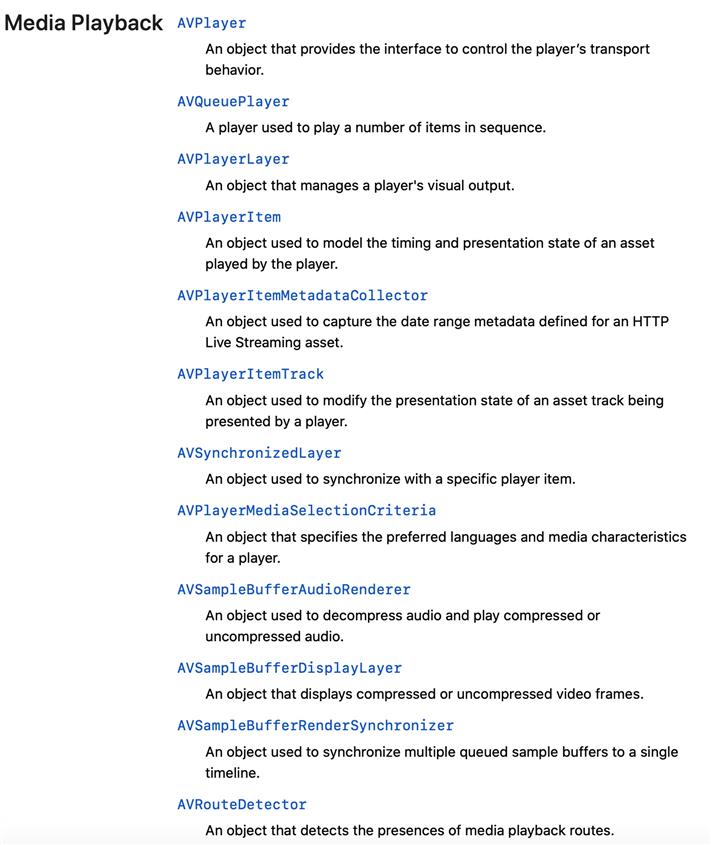

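On iOS, the AVFoundation framework handles a wide range of audiovisual media tasks, including capturing, processing, compositing, controlling, importing, and exporting media. Its main areas are: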

- Media Assets, Playback, and Editing: acquiring, playing, and editing media assets
- AirPlay 2
- Cameras and Media Capture
- System Audio Interaction
- Audio Track Engineering
- Speech Synthesis
- AV Video Composition Render Hint

With AVAudioSession and the system-provided AVPlayer and AVPlayerViewController, you can quickly play a video from a given URL. First set the audio session category at launch:

```swift
import UIKit
import AVFoundation

class AppDelegate: UIResponder, UIApplicationDelegate {
    func application(_ application: UIApplication,
                     didFinishLaunchingWithOptions launchOptions: [UIApplication.LaunchOptionsKey: Any]? = nil) -> Bool {
        let audioSession = AVAudioSession.sharedInstance()
        do {
            // .playback keeps audio playing even when the Ring/Silent switch is set to silent.
            try audioSession.setCategory(.playback, mode: .moviePlayback)
        } catch {
            print("Setting category to AVAudioSessionCategoryPlayback failed: \(error)")
        }
        return true
    }
}
```

Then play the video:

```swift
import UIKit
import AVKit

class PlayerViewController: UIViewController {
    @IBAction func playVideo(_ sender: UIButton) {
        guard let url = URL(string: "https://devstreaming-cdn.apple.com/videos/streaming/examples/bipbop_adv_example_hevc/master.m3u8") else {
            return
        }
        // Create an AVPlayer, passing it the HTTP Live Streaming URL.
        let player = AVPlayer(url: url)

        // Create a new AVPlayerViewController and pass it a reference to the player.
        let controller = AVPlayerViewController()
        controller.player = player

        // Modally present the player and call the player's play() method when complete.
        present(controller, animated: true) {
            player.play()
        }
    }
}
```

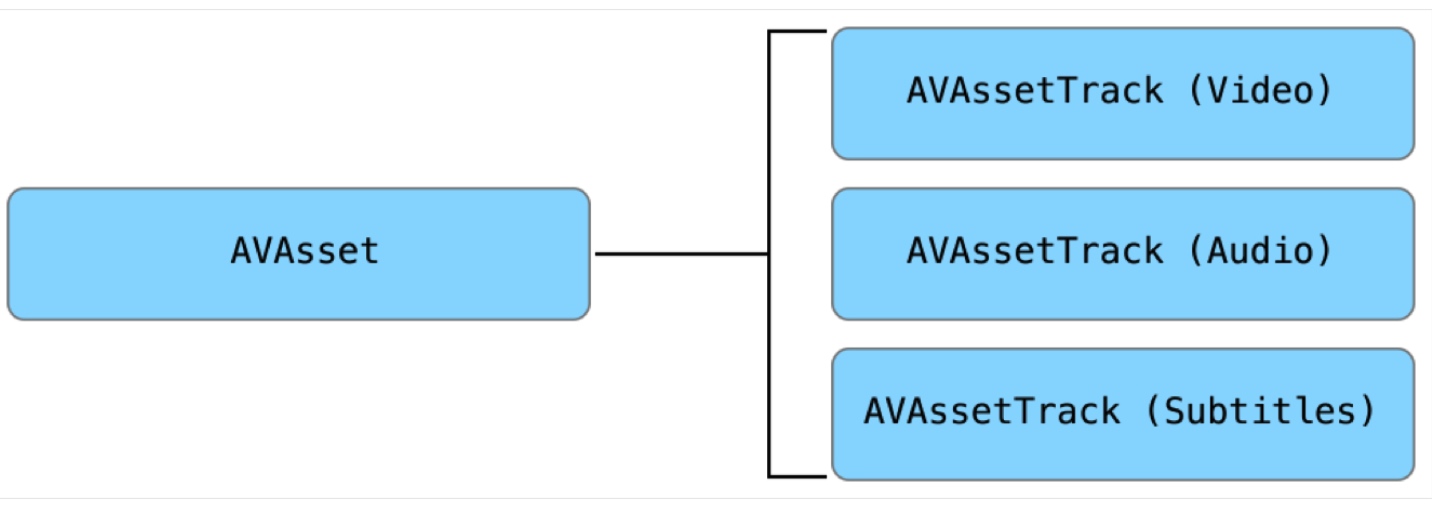

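An AVAsset instance can represent file-based media, such as a QuickTime movie or an MP3 audio file, as well as media progressively downloaded from a remote host or streamed with HTTP Live Streaming (HLS).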
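Because local files and remote or HLS URLs are handled by the same asset type, creating an asset looks the same in both cases. The sketch below is an illustration added here, not code from the original article: `makeAsset(for:)` and `describe(_:)` are hypothetical helper names, and the property loading assumes the async `load(_:)` API available on iOS 15 / macOS 12 and later.

```swift
import AVFoundation

/// Hypothetical helper: the same AVURLAsset type covers a local file URL
/// and a remote (progressive download or HLS) URL.
func makeAsset(for url: URL) -> AVURLAsset {
    return AVURLAsset(url: url)
}

/// Hypothetical helper: loads two properties asynchronously instead of
/// blocking on them (assumes the iOS 15 / macOS 12 `load(_:)` API).
@available(iOS 15.0, macOS 12.0, *)
func describe(_ asset: AVAsset) async throws -> String {
    let (duration, isPlayable) = try await asset.load(.duration, .isPlayable)
    return "duration: \(duration.seconds)s, playable: \(isPlayable)"
}
```

Creating the asset is cheap; the media is only read when properties are loaded or playback starts.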

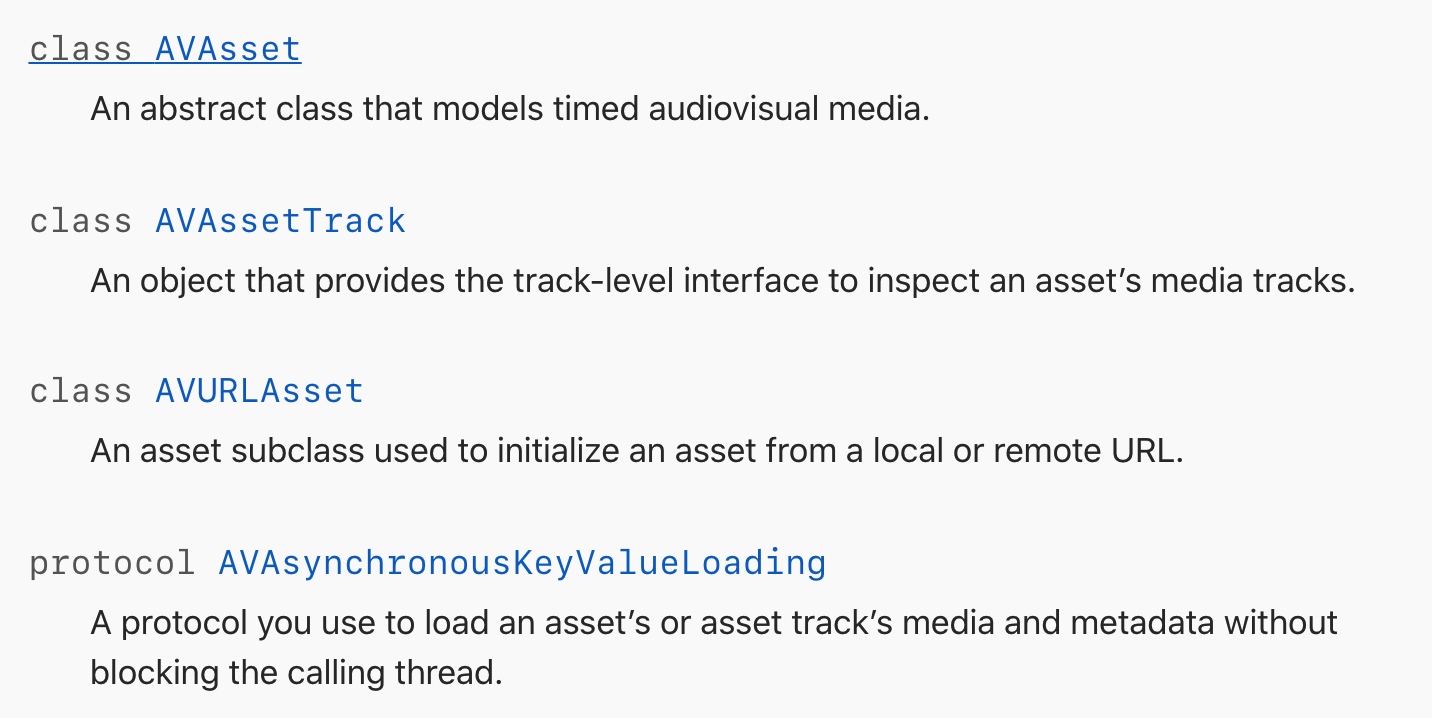

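Overall, an asset is a container object that manages a media resource. Its common uses are:

- Retrieving an asset for playback, or gathering information about it.
- `playableOffline`: checking whether the asset can be played without network access (for downloaded HLS content this is reported by `AVAssetCache.isPlayableOffline`; it is read-only, not a setting).
- Converting movie files by exporting the asset to the desired file type. Choose the final video type from AVFoundation's list of `AVFileType` presets, configure an `AVAssetExportSession` object with that type, and the session then manages the export of the existing asset.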

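For example, to convert an HEVC movie to H.264 video, specify the output file type and the encoding preset, hand this configuration to the session, and let the system API handle the complex work. Note that the compatibility check is asynchronous, so the export must be started from inside its completion handler; returning from that block does not stop code that runs after it.

```Objective-C
#import <AVFoundation/AVFoundation.h>

// Prepare the input source and output destination (supplied by the caller).
static void ExportMovieAsH264(AVAsset *hevcAsset, NSURL *outputURL) {
    // These settings will encode using H.264.
    NSString *preset = AVAssetExportPresetHighestQuality;
    AVFileType outFileType = AVFileTypeQuickTimeMovie;

    // Check that this preset, asset and output file type can be used together.
    [AVAssetExportSession determineCompatibilityOfExportPreset:preset
                                                     withAsset:hevcAsset
                                                outputFileType:outFileType
                                             completionHandler:^(BOOL compatible) {
        if (!compatible) {
            return;
        }
        // Export asynchronously.
        AVAssetExportSession *exportSession =
            [AVAssetExportSession exportSessionWithAsset:hevcAsset presetName:preset];
        if (!exportSession) {
            return;
        }
        exportSession.outputFileType = outFileType;
        exportSession.outputURL = outputURL;
        [exportSession exportAsynchronouslyWithCompletionHandler:^{
            // Handle export results.
            if (exportSession.status == AVAssetExportSessionStatusCompleted) {
                NSLog(@"Export finished: %@", outputURL);
            } else {
                NSLog(@"Export failed: %@", exportSession.error);
            }
        }];
    }];
}
```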

ITU-R BT.2100 color space

Responding to playback state changes: the following example observes the player item's status with KVO.

```swift
import AVFoundation

final class PlaybackController: NSObject {
    let url: URL   // Asset URL
    var asset: AVAsset!
    var player: AVPlayer!
    var playerItem: AVPlayerItem!

    // Key-value observing context
    private var playerItemContext = 0

    let requiredAssetKeys = [
        "playable",
        "hasProtectedContent"
    ]

    init(url: URL) {
        self.url = url
        super.init()
    }

    func prepareToPlay() {
        // Create the asset to play
        asset = AVAsset(url: url)
        // Create a new AVPlayerItem with the asset and an
        // array of asset keys to be automatically loaded
        playerItem = AVPlayerItem(asset: asset,
                                  automaticallyLoadedAssetKeys: requiredAssetKeys)
        // Register as an observer of the player item's status property
        playerItem.addObserver(self,
                               forKeyPath: #keyPath(AVPlayerItem.status),
                               options: [.old, .new],
                               context: &playerItemContext)
        // Associate the player item with the player
        player = AVPlayer(playerItem: playerItem)
    }

    override func observeValue(forKeyPath keyPath: String?,
                               of object: Any?,
                               change: [NSKeyValueChangeKey: Any]?,
                               context: UnsafeMutableRawPointer?) {
        // Only handle observations for the playerItemContext
        guard context == &playerItemContext else {
            super.observeValue(forKeyPath: keyPath, of: object, change: change, context: context)
            return
        }
        if keyPath == #keyPath(AVPlayerItem.status) {
            let status: AVPlayerItem.Status
            if let statusNumber = change?[.newKey] as? NSNumber {
                status = AVPlayerItem.Status(rawValue: statusNumber.intValue)!
            } else {
                status = .unknown
            }
            // Switch over status value
            switch status {
            case .readyToPlay:
                // Player item is ready to play.
                break
            case .failed:
                // Player item failed. See the item's error.
                print("Playback failed: \(String(describing: playerItem.error))")
            case .unknown:
                // Player item is not yet ready.
                break
            @unknown default:
                break
            }
        }
    }

    deinit {
        // Balance addObserver(_:forKeyPath:options:context:).
        playerItem?.removeObserver(self, forKeyPath: #keyPath(AVPlayerItem.status), context: &playerItemContext)
    }
}
```
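Since Swift 4, block-based KVO is an alternative to overriding `observeValue(forKeyPath:of:change:context:)`: the observation is tied to the lifetime of an `NSKeyValueObservation` token, so there is no context pointer and no manual `removeObserver`. The sketch below is an illustration, not the article's code; `StatusWatcher` and its `onChange` callback are hypothetical names.

```swift
import AVFoundation

/// Hypothetical wrapper that reports AVPlayerItem.status changes via a closure.
final class StatusWatcher {
    let player: AVPlayer
    // The observation stays active as long as this token is retained.
    private var statusObservation: NSKeyValueObservation?

    init(url: URL, onChange: @escaping (AVPlayerItem.Status) -> Void) {
        let item = AVPlayerItem(url: url)
        player = AVPlayer(playerItem: item)
        // .initial delivers the current value immediately, then every change.
        statusObservation = item.observe(\.status, options: [.initial, .new]) { item, _ in
            onChange(item.status)
        }
    }

    deinit {
        statusObservation?.invalidate()
    }
}
```

As with the KVO version, the handler may run on a background thread, so UI updates inside `onChange` should be dispatched to the main queue.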

Registering a periodic time observer

```swift
import AVFoundation

final class TimeObservingPlayer {
    var player: AVPlayer!
    var playerItem: AVPlayerItem!
    var timeObserverToken: Any?

    func addPeriodicTimeObserver() {
        // Notify every half second
        let timeScale = CMTimeScale(NSEC_PER_SEC)
        let time = CMTime(seconds: 0.5, preferredTimescale: timeScale)
        timeObserverToken = player.addPeriodicTimeObserver(forInterval: time,
                                                           queue: .main) { [weak self] time in
            // Update the player transport UI (through self) using `time`.
        }
    }

    func removePeriodicTimeObserver() {
        // Every added observer must be removed before the player is released.
        if let timeObserverToken = timeObserverToken {
            player.removeTimeObserver(timeObserverToken)
            self.timeObserverToken = nil
        }
    }
}
```
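The periodic callback above usually refreshes a time label. A minimal sketch of such a formatter follows; `transportLabel(seconds:)` is a hypothetical helper, not an AVFoundation API, and you would call it with `time.seconds`. The guard matters because `CMTime.seconds` is NaN for invalid or indefinite times, for example before an item is ready.

```swift
import Foundation

/// Hypothetical helper: formats playback seconds as "m:ss" for the transport UI.
func transportLabel(seconds: Double) -> String {
    // Invalid/indefinite CMTimes convert to NaN; show a placeholder instead.
    guard seconds.isFinite, seconds >= 0 else { return "--:--" }
    let total = Int(seconds.rounded(.down))
    return String(format: "%d:%02d", total / 60, total % 60)
}
```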

Observing fixed boundary times

```swift
import AVFoundation

final class BoundaryObservingPlayer {
    var asset: AVAsset!
    var player: AVPlayer!
    var playerItem: AVPlayerItem!
    var timeObserverToken: Any?

    func addBoundaryTimeObserver() {
        // Skip live streams (indefinite duration) and empty assets.
        guard asset.duration.isNumeric, asset.duration > .zero else { return }

        // Divide the asset's duration into quarters.
        let interval = CMTimeMultiplyByFloat64(asset.duration, multiplier: 0.25)
        var currentTime = CMTime.zero
        var times = [NSValue]()

        // Calculate boundary times
        while currentTime < asset.duration {
            currentTime = currentTime + interval
            times.append(NSValue(time: currentTime))
        }

        timeObserverToken = player.addBoundaryTimeObserver(forTimes: times,
                                                           queue: .main) {
            // Update UI
        }
    }

    func removeBoundaryTimeObserver() {
        if let timeObserverToken = timeObserverToken {
            player.removeTimeObserver(timeObserverToken)
            self.timeObserverToken = nil
        }
    }
}
```

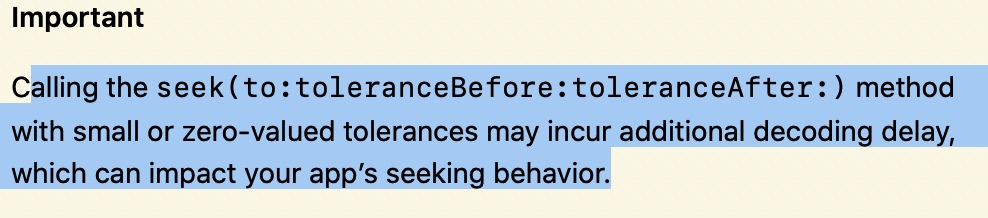

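Seeking (moving the playback position)

```swift
import AVFoundation

func seekExamples(player: AVPlayer) {
    // Seek to the 2 minute mark. For efficiency, the player may land
    // slightly before or after the requested time.
    let time = CMTime(value: 120, timescale: 1)
    player.seek(to: time)

    // Seek to the first frame at the 3:25 mark, with higher time precision.
    let seekTime = CMTime(seconds: 205, preferredTimescale: Int32(NSEC_PER_SEC))
    player.seek(to: seekTime, toleranceBefore: .zero, toleranceAfter: .zero)
}
```

Note that higher precision has a decoding cost: with zero tolerance the player may need to decode from the preceding keyframe up to the exact target frame, so precise seeks can take noticeably longer than default ones.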
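A common way to balance precision against decoding cost is to seek loosely while the user drags a progress slider and exactly only when the drag ends. The sketch below assumes that policy; `seekTolerance(isScrubbing:)` and `seek(player:toSeconds:isScrubbing:)` are hypothetical helpers, while `seek(to:toleranceBefore:toleranceAfter:completionHandler:)` is the AVPlayer method used above.

```swift
import AVFoundation

/// Hypothetical policy: any nearby frame is acceptable while scrubbing;
/// the final seek on release is frame-exact.
func seekTolerance(isScrubbing: Bool) -> CMTime {
    return isScrubbing ? .positiveInfinity : .zero
}

/// Seeks `player` to `seconds`, trading precision for speed while scrubbing.
func seek(player: AVPlayer, toSeconds seconds: Double, isScrubbing: Bool) {
    // 600 is a common video timescale (divisible by 24, 25 and 30 fps).
    let target = CMTime(seconds: seconds, preferredTimescale: 600)
    let tolerance = seekTolerance(isScrubbing: isScrubbing)
    player.seek(to: target, toleranceBefore: tolerance, toleranceAfter: tolerance) { finished in
        // `finished` is false when a later seek interrupted this one.
        guard finished else { return }
        // Update the UI for the new position here.
    }
}
```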

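Playing audio and video sample buffers with a custom player.

Build an audio player that plays your own custom audio data, optionally taking advantage of the advanced features of AirPlay 2.

Declare long-form audio when configuring the audio session:

```swift
import AVFoundation

do {
    // Route the output as long-form audio (e.g. music or podcasts).
    if #available(iOS 13.0, *) {
        try AVAudioSession.sharedInstance().setCategory(.playback, mode: .default, policy: .longFormAudio)
    } else {
        try AVAudioSession.sharedInstance().setCategory(.playback, mode: .default, policy: .longForm)
    }
} catch {
    print("Failed to set audio session route sharing policy: \(error)")
}
```

Note: `.longForm` is renamed to `.longFormAudio` in iOS 13 and later.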

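Managing the playlist (`SampleBufferItem` and the other helper types below come from the demo project):

```swift
private struct Playlist {
    // Items in the playlist, each played from its sample-buffer source.
    var items: [SampleBufferItem] = []

    // The current item index, or nil if the player is stopped.
    var currentIndex: Int?
}
```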

Inserting an item: the method writes a log entry, then uses a semaphore to serialize access to the playlist.

```swift
func insertItem(_ newItem: PlaylistItem, at index: Int) {
    playbackSerializer.printLog(component: .player, message: "inserting item at playlist#\(index)")

    // Hold the semaphore for the whole mutation.
    atomicitySemaphore.wait()
    defer { atomicitySemaphore.signal() }

    playlist.items.insert(playbackSerializer.sampleBufferItem(playlistItem: newItem, fromOffset: .zero), at: index)

    // Adjust the current index, if necessary.
    if let currentIndex = playlist.currentIndex, index <= currentIndex {
        playlist.currentIndex = currentIndex + 1
    }

    // Let the current item continue playing.
    continueWithCurrentItems()
}
```

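Mind thread safety when modifying the playlist: every read or write of `playlist` should happen while holding `atomicitySemaphore`, as `insertItem` does.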

Restarting playback from a given item:

```swift
private func restartWithItems(fromIndex proposedIndex: Int?, atOffset offset: CMTime) {
    // Stop the player if there is no current item.
    guard let currentIndex = proposedIndex,
        (0 ..< playlist.items.count).contains(currentIndex) else { stopCurrentItems(); return }

    // Start playing the requested items.
    playlist.currentIndex = currentIndex
    let playbackItems = Array(playlist.items[currentIndex ..< playlist.items.count])
    playbackSerializer.restartQueue(with: playbackItems, atOffset: offset)
}
```
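The index bookkeeping in `insertItem` and `restartWithItems` can be tried in isolation. The following is a simplified, self-contained model, not the demo's code: `Track` stands in for `SampleBufferItem`, `PlaylistModel` is a hypothetical name, and an `NSLock` plays the role of `atomicitySemaphore`.

```swift
import Foundation

/// Hypothetical stand-in for the demo's SampleBufferItem.
struct Track: Equatable { let title: String }

final class PlaylistModel {
    private(set) var items: [Track] = []
    private(set) var currentIndex: Int?
    private let lock = NSLock()   // same role as atomicitySemaphore

    func insert(_ track: Track, at index: Int) {
        lock.lock()
        defer { lock.unlock() }
        items.insert(track, at: index)
        // Keep pointing at the same track when inserting at or before it.
        if let current = currentIndex, index <= current {
            currentIndex = current + 1
        }
    }

    /// Returns the tracks to queue for playback, or [] when playback should stop.
    func restart(fromIndex proposedIndex: Int?) -> [Track] {
        lock.lock()
        defer { lock.unlock() }
        guard let index = proposedIndex, items.indices.contains(index) else {
            currentIndex = nil
            return []
        }
        currentIndex = index
        return Array(items[index...])
    }
}
```

Inserting before the playing track shifts `currentIndex` so the same track keeps playing, which is exactly what the `index <= currentIndex` check in the demo guards.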

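Schedule Playback: once the SampleBufferSerializer object receives the queue of items to play, it performs two tasks: it converts the items into a series of sample buffers containing audio data, and it enqueues those buffers for rendering. SampleBufferSerializer uses an AVSampleBufferRenderSynchronizer object to play the audio at the right time, and an AVSampleBufferAudioRenderer object to render the enqueued audio sample buffers in time for playback. See the demo for details.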
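The renderer/synchronizer pairing described above can be sketched in a few lines. This is a simplified illustration, not the demo's SampleBufferSerializer: `SampleBufferAudioPlayer` is a hypothetical class, and `nextSampleBuffer` is an assumed source of audio `CMSampleBuffer`s (for example a decoder) that returns nil at the end of the stream.

```swift
import AVFoundation

/// Hypothetical minimal player: a synchronizer drives the timeline and an
/// audio renderer pulls sample buffers whenever it can accept more.
final class SampleBufferAudioPlayer {
    let renderer = AVSampleBufferAudioRenderer()
    let synchronizer = AVSampleBufferRenderSynchronizer()
    private let queue = DispatchQueue(label: "sample-buffer-audio")   // hypothetical label

    init() {
        // The synchronizer controls when the renderer's buffers are heard.
        synchronizer.addRenderer(renderer)
    }

    func start(nextSampleBuffer: @escaping () -> CMSampleBuffer?) {
        renderer.requestMediaDataWhenReady(on: queue) { [weak self] in
            guard let self = self else { return }
            // Enqueue as long as the renderer can take more data.
            while self.renderer.isReadyForMoreMediaData {
                guard let buffer = nextSampleBuffer() else {
                    self.renderer.stopRequestingMediaData()
                    return
                }
                self.renderer.enqueue(buffer)
            }
        }
        // Start the timeline at time zero at normal speed.
        synchronizer.setRate(1.0, time: .zero)
    }

    func stop() {
        synchronizer.rate = 0
        renderer.stopRequestingMediaData()
        renderer.flush()
    }
}
```

A production player would usually wait until some buffers are enqueued before setting the rate, to avoid an audible gap at the start.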

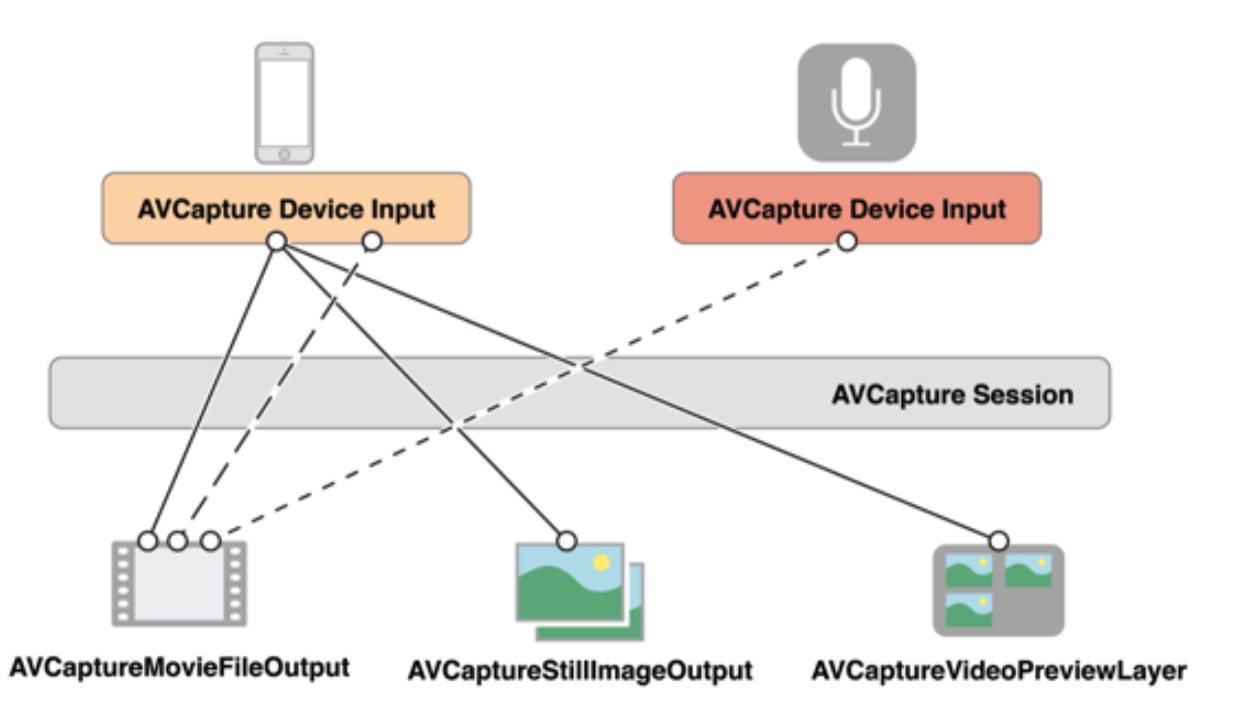

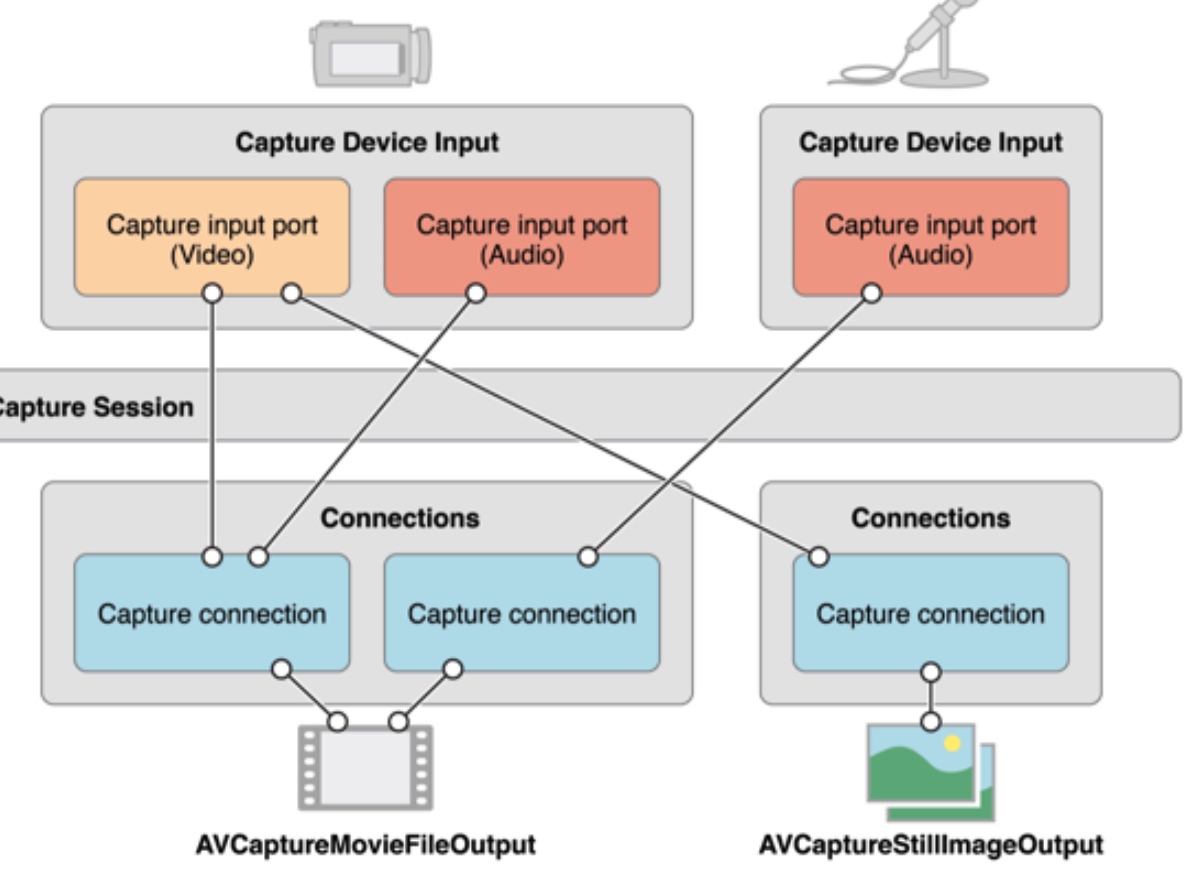

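To manage capture from a device such as a camera or microphone, you assemble objects that represent the inputs and outputs, and use an AVCaptureSession instance to coordinate the flow of data between them. At a minimum you need:

- An AVCaptureDevice instance representing the input device, such as a camera or microphone.
- An instance of a concrete subclass of AVCaptureInput to configure the ports from the input device.
- An instance of a concrete subclass of AVCaptureOutput to manage the output to a movie file or still image.
- An AVCaptureSession instance to coordinate the data flow from the inputs to the outputs.

As the figure above shows, a single output is often fed by multiple inputs. To make this easier to manage, AVFoundation introduces AVCaptureConnection, which associates input ports with the corresponding output.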

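Requesting authorization (the app's Info.plist must contain an `NSCameraUsageDescription` entry, otherwise requesting camera access terminates the app):

```swift
import AVFoundation

final class CameraController {
    func requestCameraAccessIfNeeded() {
        switch AVCaptureDevice.authorizationStatus(for: .video) {
        case .authorized: // The user has previously granted access to the camera.
            self.setupCaptureSession()
        case .notDetermined: // The user has not yet been asked for camera access.
            AVCaptureDevice.requestAccess(for: .video) { granted in
                // This handler may be called on an arbitrary queue.
                if granted {
                    self.setupCaptureSession()
                }
            }
        case .denied: // The user has previously denied access.
            return
        case .restricted: // The user can't grant access due to restrictions.
            return
        @unknown default:
            return
        }
    }

    func setupCaptureSession() {
        // Configure inputs and outputs here (see the session configuration below).
    }
}
```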

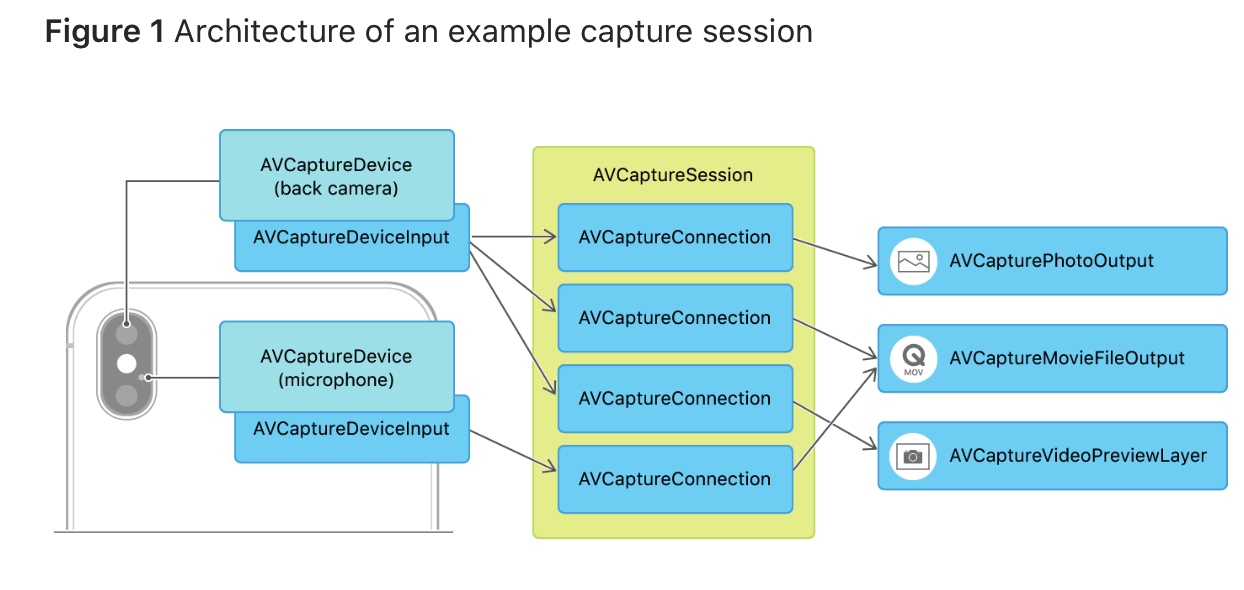

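AVCaptureSession is the basis of all media capture on iOS and macOS. It manages the app's exclusive access to the operating system's capture infrastructure and capture devices, as well as the flow of data from input devices to media outputs; that is, it manages the input and output streams and the connection objects between them. For example, the accompanying figure shows a capture session that can capture both photos and movies and provides a camera preview, using the iPhone's back camera and microphone.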

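When configuring the session, explicitly call beginConfiguration() first and commitConfiguration() when finished, so that all changes are applied together.

```swift
import AVFoundation

func configureSession(_ captureSession: AVCaptureSession) {
    captureSession.beginConfiguration()
    // Commit on every exit path, including the early returns below.
    defer { captureSession.commitConfiguration() }

    // Configure the input
    guard
        let videoDevice = AVCaptureDevice.default(.builtInWideAngleCamera,
                                                  for: .video, position: .unspecified),
        let videoDeviceInput = try? AVCaptureDeviceInput(device: videoDevice),
        captureSession.canAddInput(videoDeviceInput)
    else { return }
    captureSession.addInput(videoDeviceInput)

    // Configure the output
    let photoOutput = AVCapturePhotoOutput()
    guard captureSession.canAddOutput(photoOutput) else { return }
    captureSession.sessionPreset = .photo
    captureSession.addOutput(photoOutput)
}
```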

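```swift
import UIKit
import AVFoundation

class PreviewView: UIView {
    override class var layerClass: AnyClass {
        return AVCaptureVideoPreviewLayer.self
    }

    /// Convenience wrapper to get layer as its statically known type.
    var videoPreviewLayer: AVCaptureVideoPreviewLayer {
        return layer as! AVCaptureVideoPreviewLayer
    }
}
```

Connect the preview layer to the session so that it displays the session's live video:

```swift
// The preview layer shows the session's data in real time.
self.previewView.videoPreviewLayer.session = self.captureSession
```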

Pay attention to the video orientation of the session.

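After configuring the inputs, outputs, and preview, call startRunning() to let data flow from the inputs to the outputs.

For some capture outputs, running the session is all you need to start media capture. For example, if the session contains an AVCaptureVideoDataOutput, you start receiving delivered video frames as soon as the session is running.

For other capture outputs, you first start the session running, then use the capture output class itself to initiate capture. For example, in a photography app, running the session enables a viewfinder-style preview, but you call the AVCapturePhotoOutput capturePhoto(with:delegate:) method to take a picture.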
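Both steps can be sketched together. This is an illustrative class, not code from the article: `PhotoCaptureController` and the queue label are hypothetical, and it assumes the session has already been configured with `photoOutput` (as in the configuration code above). Because `startRunning()` blocks until the session starts, the sketch calls it on a serial background queue.

```swift
import AVFoundation

/// Hypothetical controller: runs the session off the main thread and
/// captures a still photo on demand.
final class PhotoCaptureController: NSObject, AVCapturePhotoCaptureDelegate {
    let session = AVCaptureSession()
    let photoOutput = AVCapturePhotoOutput()   // assumed to be added to `session` elsewhere
    private let sessionQueue = DispatchQueue(label: "capture-session")   // hypothetical label

    func start() {
        // startRunning() is blocking; keep it off the main thread.
        sessionQueue.async { self.session.startRunning() }
    }

    func stop() {
        sessionQueue.async { self.session.stopRunning() }
    }

    func takePhoto() {
        // Default settings; call only while the session is running.
        photoOutput.capturePhoto(with: AVCapturePhotoSettings(), delegate: self)
    }

    // Called once the captured photo has been processed.
    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingPhoto photo: AVCapturePhoto,
                     error: Error?) {
        if let error = error {
            print("Capture failed: \(error)")
            return
        }
        if let data = photo.fileDataRepresentation() {
            print("Captured \(data.count) bytes")   // e.g. save to disk or the photo library
        }
    }
}
```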

Demo reference:

ffmpeg/ijkplayer

Original article: https://www.cnblogs.com/wwoo/p/avfoundation-framework-yue-du-yi.html