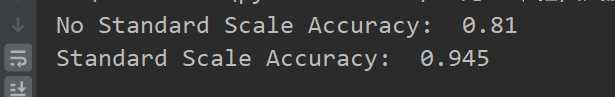

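This post uses the Helen dating example to test the KDTree I implemented earlier. It also examines how normalizing (or not normalizing) the features affects the model's performance.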

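The experiment confirms that feature normalization can help a model a great deal in machine learning, especially a distance-based model like KNN.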
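Here is a minimal sketch of why scaling matters for a distance-based model. It uses made-up numbers: three features on very different scales, loosely modeled on the dating data (yearly frequent-flyer miles, percent of time spent gaming, liters of ice cream per week). None of the values come from datingTestSet.txt. With raw features, the large-magnitude first column decides which point counts as "nearest". After z-score standardization, computed from the training rows only, the other two columns carry their share of the distance. This is the same (x − mean) / std formula, with the population standard deviation, that `StandardScaler` applies.

```python
import numpy as np

# Hypothetical training rows (illustrative values only):
# column 0 is in the tens of thousands, columns 1 and 2 are small.
train = np.array([
    [40000.0, 8.0, 0.9],
    [14000.0, 7.0, 1.6],
    [26000.0, 1.4, 0.8],
    [75000.0, 13.0, 0.4],
    [38000.0, 1.7, 0.1],
])

a = np.array([30000.0, 1.0, 0.2])
b = np.array([30500.0, 12.0, 1.5])  # close to a in column 0, far in columns 1 and 2
c = np.array([33000.0, 1.2, 0.3])   # far from a in column 0, close in columns 1 and 2


def euclidean(u, v):
    """Euclidean (p=2) distance between two feature vectors."""
    return float(np.sqrt(np.sum((u - v) ** 2)))


# Raw features: column 0 dominates, so b looks closer to a than c does.
print(euclidean(a, b) < euclidean(a, c))  # True

# Z-score statistics come from the training rows only (ddof=0, population std).
mean = train.mean(axis=0)
std = train.std(axis=0)


def scale(x):
    """Standardize x with the training-set mean and standard deviation."""
    return (x - mean) / std


# Scaled features: c is now the nearer point.
print(euclidean(scale(a), scale(b)) < euclidean(scale(a), scale(c)))  # False
```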

```python
from KDTree import KDTree  # see my earlier post on implementing a KDTree
from sklearn import model_selection, preprocessing
import pandas as pd


class KNN(object):
    def __init__(self, K=1, p=2):
        self.kdtree = KDTree()
        self.K = K  # number of neighbors
        self.p = p  # distance order; stored, but not passed to the KDTree here

    def fit(self, x_data, y_data):
        # build the KD-tree over the training samples
        self.kdtree.build_tree(x_data, y_data)

    def predict(self, pre_x, label):
        # label: task type; a string containing 'class' selects classification,
        # anything else selects regression
        if 'class' in label:
            return self.kdtree.predict_classification(pre_x, K=self.K)
        else:
            return self.kdtree.predict_regression(pre_x, K=self.K)

    def test_check(self, test_xx, test_y):
        # only supports classification problems; returns the accuracy
        correct = 0
        for i, xi in enumerate(test_xx):
            pre_y = self.kdtree.predict_classification(Xi=xi, K=self.K)
            if pre_y == test_y[i]:
                correct += 1
        return correct / len(test_y)


file_path = "datingTestSet.txt"
data = pd.read_csv(file_path, sep="\t", header=None)
XX = data.iloc[:, :-1].values  # feature columns
Y = data.iloc[:, -1].values    # label column (last column)
train_xx, test_xx, train_y, test_y = model_selection.train_test_split(
    XX, Y, test_size=0.2, random_state=123, shuffle=True)

knn = KNN(K=5, p=2)
knn.fit(train_xx, train_y)
acc = knn.test_check(test_xx, test_y)
print("No Standard Scale Accuracy: ", acc)

# The feature values differ greatly in magnitude across dimensions, so apply feature scaling
scaler = preprocessing.StandardScaler()
# The mean and standard deviation must be computed from the training set only
scaler.fit(train_xx)
stand_train_xx = scaler.transform(train_xx)
stand_test_xx = scaler.transform(test_xx)
new_knn = KNN(K=5, p=2)
new_knn.fit(stand_train_xx, train_y)
new_acc = new_knn.test_check(stand_test_xx, test_y)
print("Standard Scale Accuracy: ", new_acc)
```
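The KDTree module from the earlier post isn't reproduced here. One way to sanity-check its predictions is a brute-force KNN that measures the distance to every training point and takes a majority vote among the K nearest. The helpers below (`brute_force_knn_predict`, `brute_force_knn_accuracy`) are hypothetical and not part of the original code. Ties in the vote go to whichever label `Counter.most_common` lists first, and ties in distance go to whatever order `np.argsort` produces. Either may differ from the KDTree's own tie-breaking.

```python
import numpy as np
from collections import Counter


def brute_force_knn_predict(train_x, train_y, x, k=5, p=2):
    """Predict x's label by majority vote among its k nearest training points.

    Distance is the Minkowski distance of order p (p=2 is Euclidean).
    """
    dists = np.sum(np.abs(train_x - x) ** p, axis=1) ** (1.0 / p)
    nearest = np.argsort(dists)[:k]  # indices of the k closest training points
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]


def brute_force_knn_accuracy(train_x, train_y, test_x, test_y, k=5, p=2):
    """Fraction of test points whose predicted label matches the true label."""
    correct = sum(
        brute_force_knn_predict(train_x, train_y, xi, k, p) == yi
        for xi, yi in zip(test_x, test_y)
    )
    return correct / len(test_y)
```

Using the arrays from the script above, try `brute_force_knn_accuracy(train_xx, train_y, test_xx, test_y, k=5)` and the same call on `stand_train_xx`/`stand_test_xx`. If the KDTree search is exact, these results should closely match `knn.test_check(...)` and `new_knn.test_check(...)`, apart from tie-breaking differences.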

Original post: https://www.cnblogs.com/ISGuXing/p/13770130.html