```shell
pip install requests
```

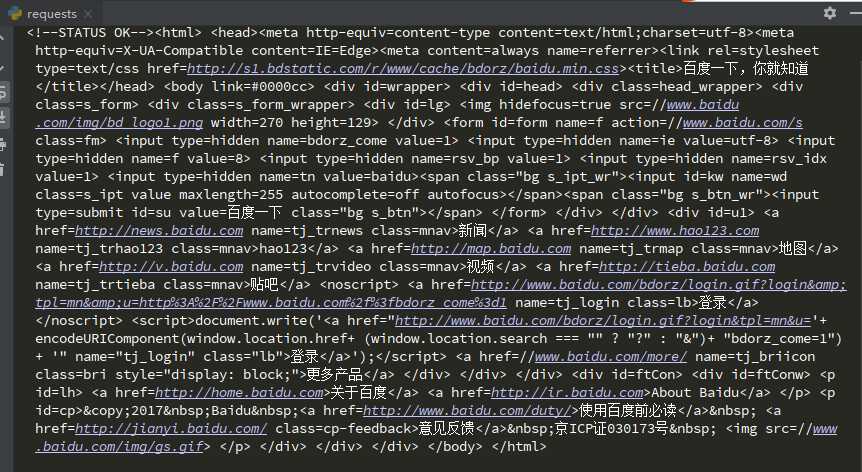

Fetching the HTML of the Baidu homepage with requests:

```python
import requests

url = 'http://www.baidu.com'

# .content is the raw body (bytes); .decode() turns it into a str, UTF-8 by default
response = requests.get(url).content.decode()

print(response)
```
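Why decode `.content` by hand instead of reading `.text`? `response.text` decodes the body with `response.encoding`, which requests derives from the `Content-Type` header. A minimal offline sketch of that rule using `requests.utils.get_encoding_from_headers`; the two header values are made-up examples, not the headers Baidu actually sends:

```python
import requests

# Hypothetical Content-Type headers; no network request is made here.
with_charset = {"content-type": "text/html; charset=utf-8"}
without_charset = {"content-type": "text/html"}

# requests uses the charset parameter when the header has one...
enc_with = requests.utils.get_encoding_from_headers(with_charset)
# ...and falls back to ISO-8859-1 for text/* types that omit it.
enc_without = requests.utils.get_encoding_from_headers(without_charset)

print(enc_with)
print(enc_without)

# Decoding UTF-8 bytes with that fallback garbles Chinese text,
# while bytes.decode() (UTF-8 by default) recovers it.
body = "百度一下".encode("utf-8")
garbled = body.decode(enc_without)
print(garbled == "百度一下")
print(body.decode() == "百度一下")
```

With a real response, setting `response.encoding = response.apparent_encoding` (or `'utf-8'`) before reading `.text` is the other common fix.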

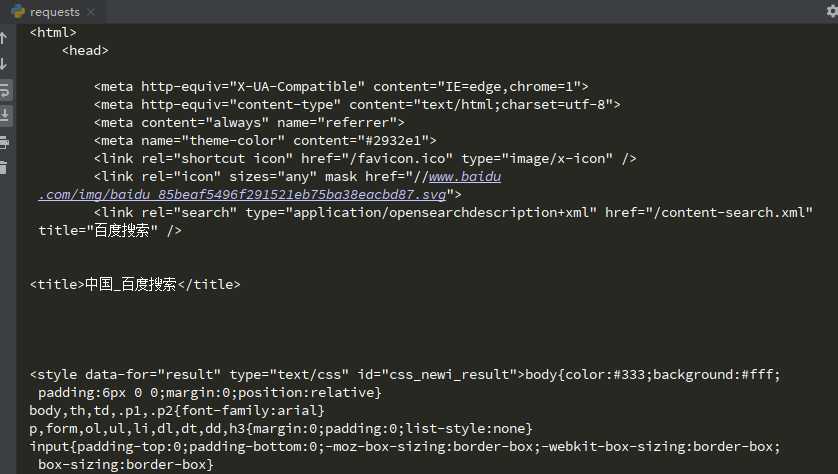

```python
import requests

url = 'http://www.baidu.com/s?'

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.71 Safari/537.36"
}

# Query-string parameters: the search keyword
wd = {"wd": "中国"}

response = requests.get(url, params=wd, headers=headers)

data = response.text      # the response body as a string
data2 = response.content  # the response body as raw bytes

print(data)
```
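`params` is URL-encoded into the query string, and `headers` is sent as given. Building the request with `requests.Request(...).prepare()` shows the final URL without contacting the server. A sketch; the User-Agent value is shortened for readability:

```python
import requests

url = "http://www.baidu.com/s?"
wd = {"wd": "中国"}
headers = {"User-Agent": "Mozilla/5.0"}

# prepare() applies the same encoding requests.get() would, but sends nothing.
prepared = requests.Request("GET", url, params=wd, headers=headers).prepare()

print(prepared.url)                    # http://www.baidu.com/s?wd=%E4%B8%AD%E5%9B%BD
print(prepared.headers["User-Agent"])  # Mozilla/5.0
```

The keyword is encoded as UTF-8 and percent-escaped, so `中国` becomes `%E4%B8%AD%E5%9B%BD`.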

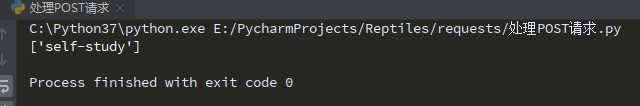

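Sending a POST request to Youdao Translate and extracting the result with the regular expressions from the previous chapter: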

```python
import requests
import re

# Build the request headers
header = {
    "User-Agent": "Mozilla/5.0 (Linux; U; Android 8.1.0; zh-cn; BLA-AL00 Build/HUAWEIBLA-AL00) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/57.0.2987.132 MQQBrowser/8.9 Mobile Safari/537.36"
}

url = "http://fanyi.youdao.com/translate?smartresult=dict&smartresult=rule"

key = "自学"

# Form fields submitted with the POST request
formdata = {
    "i": key,
    "from": "AUTO",
    "to": "AUTO",
    "smartresult": "dict",
    "client": "fanyideskweb",
    "salt": "15503049709404",
    "sign": "3da914b136a37f75501f7f31b11e75fb",
    "ts": "1550304970940",
    "bv": "ab57a166e6a56368c9f95952de6192b5",
    "doctype": "json",
    "version": "2.1",
    "keyfrom": "fanyi.web",
    "action": "FY_BY_REALTIME",
    "typoResult": "false"
}

response = requests.post(url, headers=header, data=formdata)

data = response.json()    # body parsed as JSON (raises an error if it is not JSON)
data2 = response.text     # body as a string
data3 = response.content  # body as bytes

# Regular expression: capture the value between "tgt":" and "}]]},
# e.g. self-study in "tgt":"self-study"}]]}
pat = r'"tgt":"(.*?)"}]]}'

result = re.findall(pat, response.text)

print(result)
```
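The regex works because the translation sits right before `"}]]}` in the JSON body. Since `response.json()` already parses that body, indexing into the parsed object is usually sturdier. A sketch on a hand-written sample; the field layout is an assumption inferred from the regex above, not taken from Youdao documentation:

```python
import json
import re

# Hypothetical response body; its shape is assumed from the pattern "tgt":"(.*?)"}]]}
sample = '{"type":"ZH_CN2EN","errorCode":0,"translateResult":[[{"src":"自学","tgt":"self-study"}]]}'

# Approach 1: regular expression on the raw text (what the example above does)
pat = r'"tgt":"(.*?)"}]]}'
by_regex = re.findall(pat, sample)

# Approach 2: parse the JSON (what response.json() does) and index into it
parsed = json.loads(sample)
by_json = [item["tgt"] for segment in parsed["translateResult"] for item in segment]

print(by_regex)  # ['self-study']
print(by_json)   # ['self-study']
```

If the body held more than one segment, the non-greedy regex could run across segment boundaries, while the JSON version still returns one string per segment.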

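```python
import requests

# Set the proxy
# proxy = {"http": "http://<proxy IP>:<port>"}
proxy = {"http": "http://118.113.247.26:9999"}

url = "http://www.baidu.com"

# The proxy may be unreachable, so give up after 10 seconds instead of hanging
response = requests.get(url, proxies=proxy, timeout=10)

print(response)  # e.g. <Response [200]>
```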
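The keys of `proxies` are URL schemes: requests picks the entry matching the scheme of the URL being fetched, so an `http` entry alone does not apply to `https://` URLs. A sketch using `requests.utils.select_proxy`, a helper inside requests that is not part of its documented public API (it may change between versions); no connection is made:

```python
import requests

proxy = {"http": "http://118.113.247.26:9999"}

# Which entry of the dict would requests use for each URL?
for_http = requests.utils.select_proxy("http://www.baidu.com", proxy)
for_https = requests.utils.select_proxy("https://www.baidu.com", proxy)

print(for_http)   # http://118.113.247.26:9999
print(for_https)  # None: this dict supplies no proxy for HTTPS URLs

# Covering both schemes takes one key per scheme.
both = {"http": "http://118.113.247.26:9999", "https": "http://118.113.247.26:9999"}
print(requests.utils.select_proxy("https://www.baidu.com", both))
```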

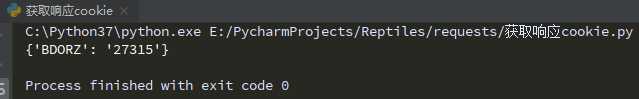

```python
import requests

url = 'http://www.baidu.com'

response = requests.get(url)

# 1. Get the CookieJar object returned with the response
cookiejar = response.cookies

# 2. Convert the CookieJar into a dict
cookiedict = requests.utils.dict_from_cookiejar(cookiejar)

print(cookiedict)
```
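`dict_from_cookiejar` has a counterpart, `cookiejar_from_dict`, for going the other way, e.g. to reuse saved cookies later. A sketch that builds the jar by hand so it runs without a network; the cookie name and value are made up:

```python
from requests.cookies import RequestsCookieJar
import requests

# Stand-in for response.cookies (hypothetical cookie)
jar = RequestsCookieJar()
jar.set("BAIDUID", "demo-value", domain=".baidu.com", path="/")

# CookieJar -> dict, as in the example above
cookiedict = requests.utils.dict_from_cookiejar(jar)
print(cookiedict)  # {'BAIDUID': 'demo-value'}

# dict -> CookieJar
jar2 = requests.utils.cookiejar_from_dict(cookiedict)
print(jar2.get("BAIDUID"))  # demo-value
```

A plain dict also works directly as `requests.get(url, cookies=cookiedict)`.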

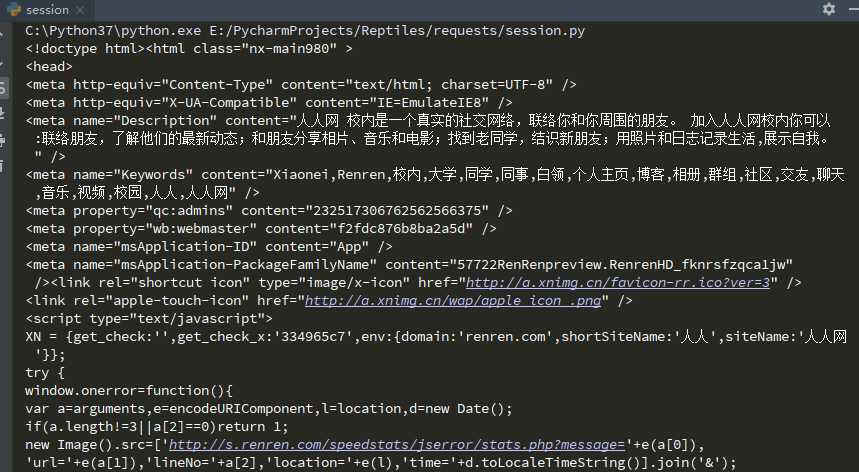

```python
import requests

url = "http://www.renren.com/Plogin.do"

header = {
    "User-Agent": "Mozilla/5.0 (Linux; U; Android 8.1.0; zh-cn; BLA-AL00 Build/HUAWEIBLA-AL00) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/57.0.2987.132 MQQBrowser/8.9 Mobile Safari/537.36"
}

# Create a Session object; it keeps cookies (and its headers) across requests
ses = requests.Session()
ses.headers.update(header)

# Login parameters (replace with your own account)
data = {"email": "your_email@example.com", "password": "your_password"}

# Post the username and password; the session stores the cookies the server sets
ses.post(url, data=data)

# Request the page that requires a login
response = ses.get("http://www.renren.com/880151237/profile")

print(response.text)
```
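A `Session` merges its own headers and cookie jar into every request it sends, which is what carries the login cookie from `ses.post()` to `ses.get()`. This sketch previews that merge with `Session.prepare_request` instead of sending anything; the cookie `sid=abc123` is a hypothetical stand-in for whatever the login response would set:

```python
import requests

ses = requests.Session()
ses.headers.update({"User-Agent": "Mozilla/5.0"})
# Hypothetical login cookie; normally ses.post(...) would store it
ses.cookies.set("sid", "abc123")

# Shows what ses.get() would send, without sending it
prepared = ses.prepare_request(
    requests.Request("GET", "http://www.renren.com/880151237/profile")
)

print(prepared.headers["User-Agent"])  # Mozilla/5.0
print(prepared.headers["Cookie"])      # sid=abc123
```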

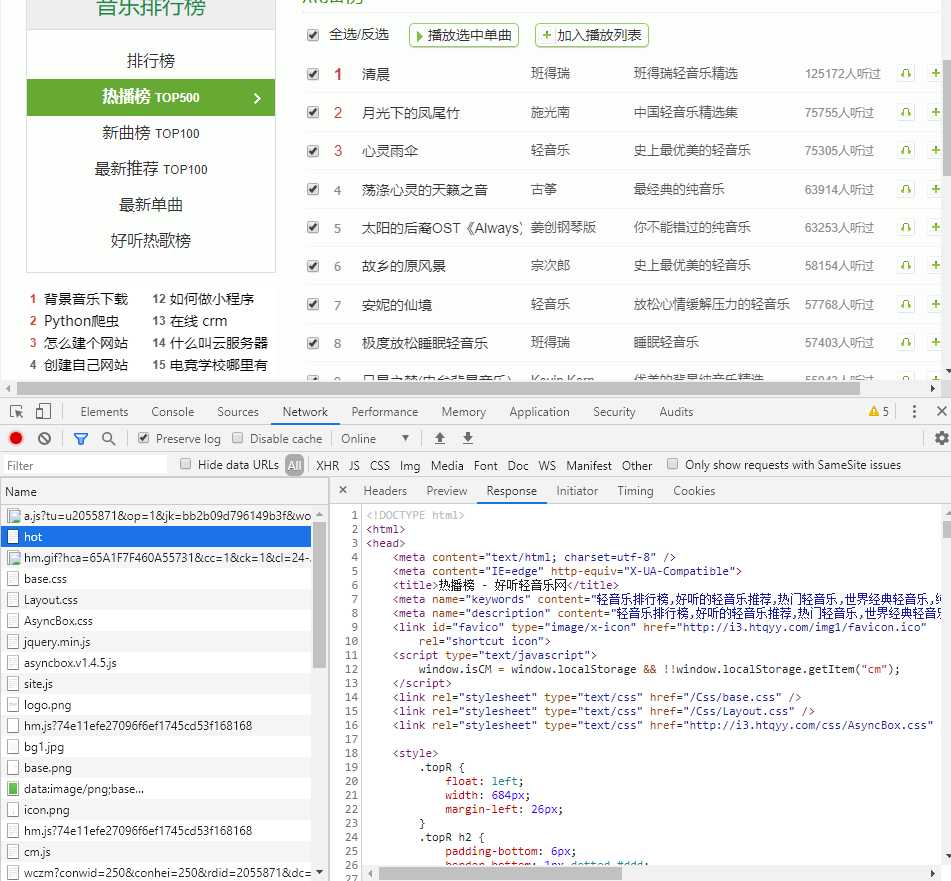

The point of this crawler is to get around the site's limit of only two downloads.

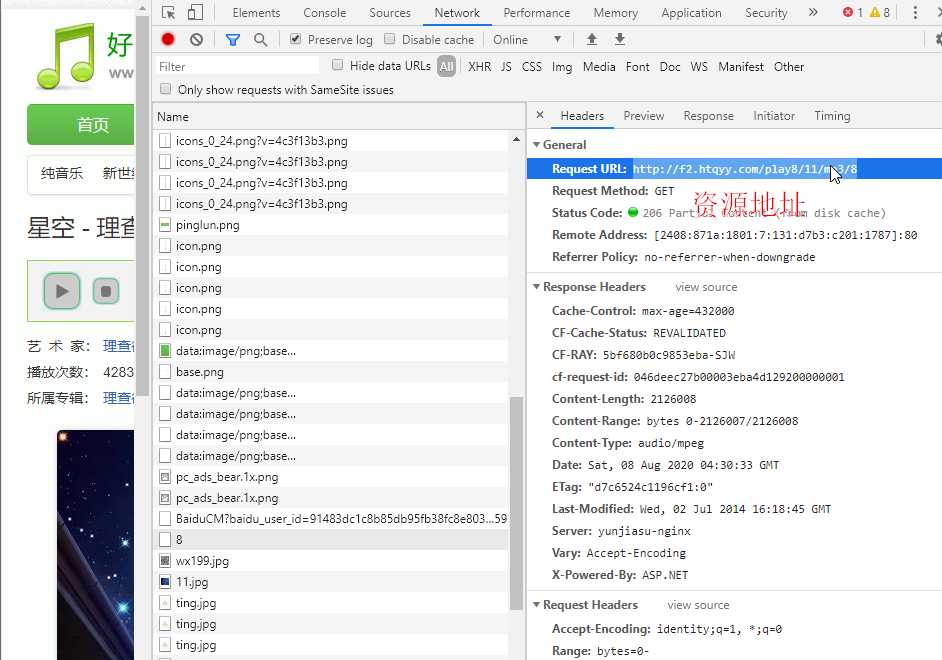

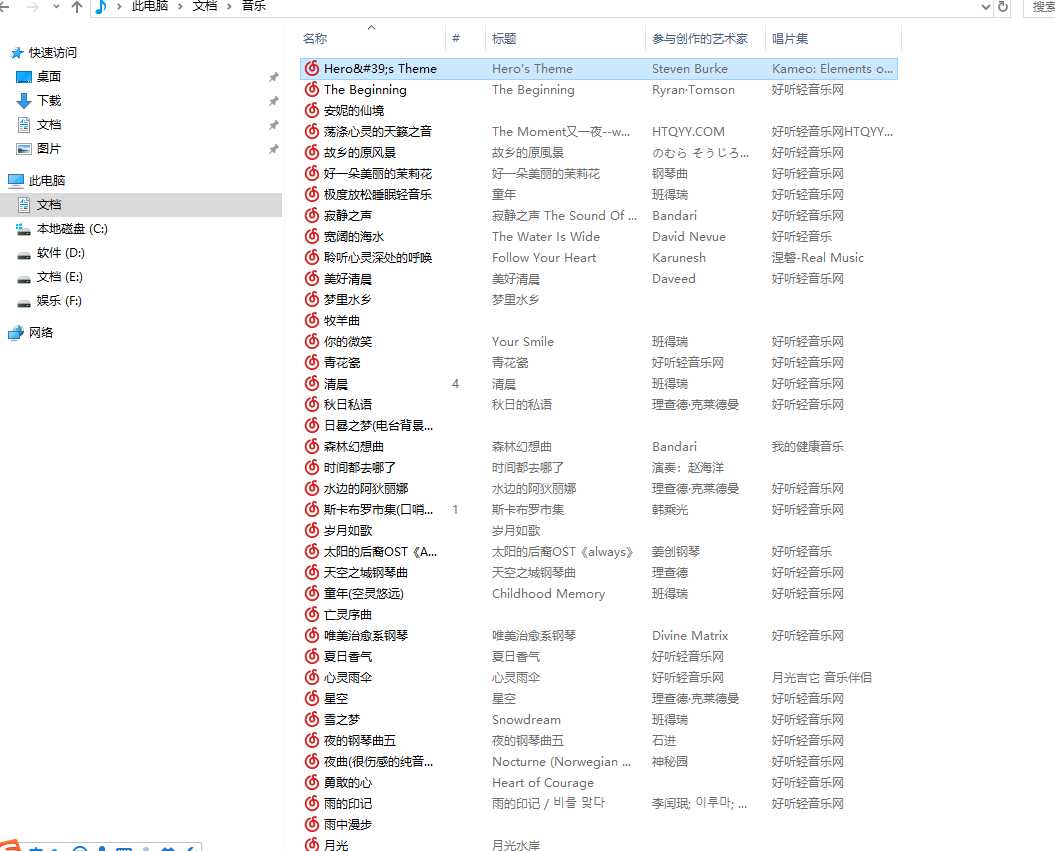

```python
import re  # regular expression library
import time

import requests

# http://www.htqyy.com/top/musicList/hot?pageIndex=0&pageSize=20  # page 1
# http://www.htqyy.com/top/musicList/hot?pageIndex=1&pageSize=20  # page 2
# http://www.htqyy.com/top/musicList/hot?pageIndex=2&pageSize=20  # page 3

# i.e. pageIndex = page number - 1

# Song page:      http://www.htqyy.com/play/20
# Audio resource: http://f2.htqyy.com/play8/11/mp3/8

# page = int(input("Number of pages to crawl: "))

header = {
    "Referer": "http://www.htqyy.com/top/hot"
}

songID = []
songName = []

for i in range(0, 2):  # pageIndex 0 and 1, i.e. the first two pages
    url = "http://www.htqyy.com/top/musicList/hot?pageIndex=" + str(i) + "&pageSize=20"

    # Fetch the chart page
    html = requests.get(url, headers=header)
    strr = html.text

    # Each song on the page looks like:
    # <span class="title"><a href="/play/33" target="play" title="清晨" sid="33">清晨</a></span>
    part1 = r'title="(.*?)" sid'
    part2 = r'sid="(.*?)"'

    idlist = re.findall(part2, strr)
    titlelist = re.findall(part1, strr)

    songID.extend(idlist)  # extend() appends every item of idlist to songID
    songName.extend(titlelist)

print("Songs:", songName)
print("Song IDs:", songID)

for song_id, song in zip(songID, songName):
    songUrl = "http://f2.htqyy.com/play8/" + str(song_id) + "/mp3/8"

    data = requests.get(songUrl).content

    with open("D:\\Documents\\Music\\{}.mp3".format(song), "wb") as f:
        f.write(data)
    time.sleep(0.5)  # pause between downloads
```
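Two weak spots in the crawler above: titles and IDs come from two separate `findall` calls, so they stay aligned only if every match pairs up, and song titles go straight into a Windows file name, where characters such as `?` or `:` make `open()` fail. A sketch of both fixes plus the page-to-`pageIndex` mapping from the comments; the HTML line is the sample from the code comment, and `page_url`, `parse_songs` and `safe_filename` are hypothetical helper names:

```python
import re


def page_url(page: int, page_size: int = 20) -> str:
    """Chart page number (1-based) -> list URL; pageIndex is the page number minus 1."""
    return (
        "http://www.htqyy.com/top/musicList/hot"
        f"?pageIndex={page - 1}&pageSize={page_size}"
    )


def parse_songs(html: str) -> list:
    """Return (title, sid) pairs; one pattern with two groups keeps each pair together."""
    return re.findall(r'title="(.*?)" sid="(.*?)"', html)


def safe_filename(name: str) -> str:
    """Replace characters that Windows forbids in file names with '_'."""
    return re.sub(r'[\\/:*?"<>|]', "_", name).strip()


# Sample markup taken from the comment in the crawler above
sample = '<span class="title"><a href="/play/33" target="play" title="清晨" sid="33">清晨</a></span>'

print(page_url(1))               # http://www.htqyy.com/top/musicList/hot?pageIndex=0&pageSize=20
print(parse_songs(sample))       # [('清晨', '33')]
print(safe_filename("A/B: C?"))  # A_B_ C_
```

In the download loop, `for song, song_id in parse_songs(strr)` would then replace the two parallel lists, and `safe_filename(song)` would go into the `open()` path.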

Original post: https://www.cnblogs.com/zibinchen/p/13457339.html