    import numpy as np

    def loadDataSet():
        # Each inner list is the word list of one document
        postingList = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
                       ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
                       ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
                       ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
                       ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
                       ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
        classVec = [0, 1, 0, 1, 0, 1]  # labels: 1 is abusive, 0 not
        return postingList, classVec

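    def createVocabList(dataSet):
        # Build the vocabulary list: merge the words of all documents into one list
        VocabSet = set()  # a set removes duplicates
        for document in dataSet:
            VocabSet = VocabSet | set(document)  # union of two sets (same type on both sides)
        # The union produced a different word order from run to run here, so the
        # list is sorted below to make results comparable. In isolated tests the
        # order was stable across repeated debugging runs; string hash
        # randomization between interpreter runs may explain the difference.
        VocabList = list(VocabSet)
        VocabList.sort()  # sort the list in place
        return VocabList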

    def WordSet2Vec(VocaList, inputSet):
        # Vectorize the input document inputSet against the full vocabulary list built above
        returnVec = [0] * len(VocaList)
        for word in inputSet:
            if word in VocaList:
                returnVec[VocaList.index(word)] = 1  # mark the word as present
            else:
                print("the word: %s is not in my Vocabulary!" % word)
        return returnVec
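
`WordSet2Vec` calls `VocaList.index(word)` for every word, which scans the list each time. For larger vocabularies, one possible variation is to build a word-to-position dictionary once. The names `build_index` and `words_to_vec` below are hypothetical and not part of the original code, and this sketch silently skips unknown words instead of printing a message.

```python
def build_index(vocab_list):
    # Map each word to its column position once, so later lookups are O(1)
    return {word: i for i, word in enumerate(vocab_list)}

def words_to_vec(word_index, input_words):
    # Set-of-words vector: 1 if the word occurs in the document, else 0
    vec = [0] * len(word_index)
    for word in input_words:
        pos = word_index.get(word)
        if pos is not None:  # words outside the vocabulary are ignored
            vec[pos] = 1
    return vec

vocab = sorted({'dog', 'my', 'stupid'})  # ['dog', 'my', 'stupid']
index = build_index(vocab)
result = words_to_vec(index, ['my', 'dog', 'cat'])
print(result)
```

The output vector has the same layout as the one produced by `WordSet2Vec`, so it could be passed to the training code unchanged.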

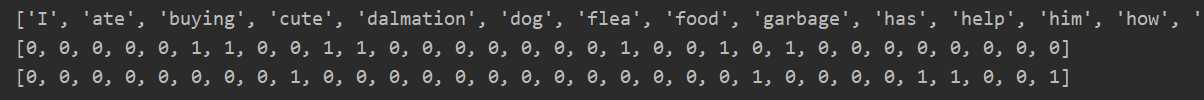

    DocData, classVec = loadDataSet()
    voclist = createVocabList(DocData)
    print(voclist)
    print(WordSet2Vec(voclist, DocData[0]))
    print(WordSet2Vec(voclist, DocData[3]))

    def trainNB0(trainMatrix, trainCategory):
        # Train the naive Bayes model; both arguments are numpy arrays.
        # trainMatrix: m x n matrix of document word vectors
        #              (m documents, n columns = vocabulary size)
        # trainCategory: the label of each document
        numTrainDoc = len(trainMatrix)               # number of documents
        pC1 = np.sum(trainCategory) / numTrainDoc    # p(c1); p(c0) = 1 - p(c1)
        wordVecNum = len(trainMatrix[0])             # every word vector has the same length
        # Initialize the per-class word count vectors
        p1VecNum, p0VecNum = np.zeros(wordVecNum), np.zeros(wordVecNum)
        p1Sum, p0Sum = 0, 0
        # Loop over every document
        for i in range(numTrainDoc):
            if trainCategory[i] == 1:                # abusive document
                p1VecNum += trainMatrix[i]           # count each word's occurrences in abusive documents
                p1Sum += np.sum(trainMatrix[i])      # total number of words seen in abusive documents
            else:                                    # normal document
                p0VecNum += trainMatrix[i]
                p0Sum += np.sum(trainMatrix[i])
        # Per-class word probability = word count / total word count of that class
        p1Vect = p1VecNum / p1Sum
        p0Vect = p0VecNum / p0Sum
        return p1Vect, p0Vect, pC1

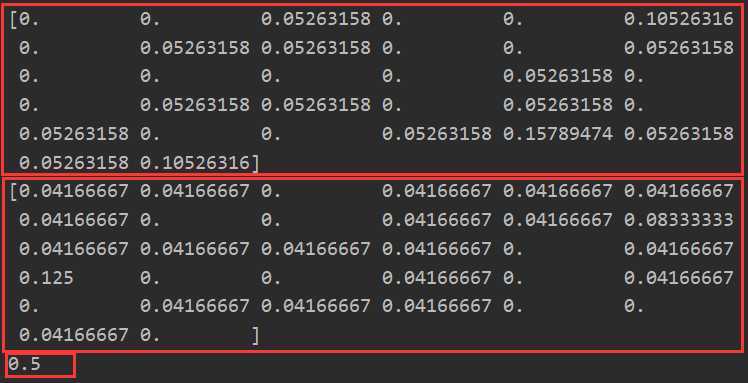

    DocData, classVec = loadDataSet()
    voclist = createVocabList(DocData)

    # Build the word-vector matrix for all documents
    trainMulList = []
    for doc in DocData:
        trainMulList.append(WordSet2Vec(voclist, doc))

    # Convert the word-vector list and the label list to numpy.ndarray
    trainMat = np.array(trainMulList)
    trainClassVec = np.array(classVec)

    # Train the model
    p1vec, p0vec, pc1 = trainNB0(trainMat, trainClassVec)
    print(p1vec)
    print(p0vec)
    print(pc1)
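
With the unsmoothed estimates from this first `trainNB0`, any word that never occurs in a class gets probability 0 for that class, and a single zero wipes out the whole product of word probabilities. The toy counts below are made up for illustration and do not come from the training set above.

```python
import numpy as np

# Hypothetical word counts for one class over a 3-word vocabulary
counts_c1 = np.array([3.0, 0.0, 1.0])    # the second word never appears in this class
p_c1 = counts_c1 / counts_c1.sum()       # unsmoothed estimates: [0.75, 0.0, 0.25]

doc = np.array([1, 1, 0])                # the document contains words 0 and 1
# Product of p(w_i | c1) over the words present in the document
likelihood = np.prod(p_c1[doc == 1])
print(likelihood)                        # 0.0
```

Because the product is exactly zero, the class can never be chosen for this document, no matter how strongly the other words point to it.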


    def trainNB0(trainMatrix, trainCategory):
        # Train the naive Bayes model; both arguments are numpy arrays.
        # trainMatrix: m x n matrix of document word vectors
        #              (m documents, n columns = vocabulary size)
        # trainCategory: the label of each document
        numTrainDoc = len(trainMatrix)               # number of documents
        pC1 = np.sum(trainCategory) / numTrainDoc    # p(c1); p(c0) = 1 - p(c1)
        wordVecNum = len(trainMatrix[0])             # every word vector has the same length
        # Initialize the count vectors, now with Laplace smoothing
        p1VecNum, p0VecNum = np.ones(wordVecNum), np.ones(wordVecNum)
        p1Sum, p0Sum = 2.0, 2.0                      # N*1, where N is the number of classes
        # Loop over every document
        for i in range(numTrainDoc):
            if trainCategory[i] == 1:                # abusive document
                p1VecNum += trainMatrix[i]           # count each word's occurrences in abusive documents
                p1Sum += np.sum(trainMatrix[i])      # total number of words seen in abusive documents
            else:                                    # normal document
                p0VecNum += trainMatrix[i]
                p0Sum += np.sum(trainMatrix[i])
        # Per-class word probability = word count / total word count of that class;
        # take the logarithm to avoid underflow
        p1Vect = np.log(p1VecNum / p1Sum)
        p0Vect = np.log(p0VecNum / p0Sum)
        return p1Vect, p0Vect, pC1
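
The reason for taking logarithms can be seen in a small experiment: multiplying many small probabilities underflows to 0.0 in floating point, while summing their logarithms stays finite. The vector of 2000 probabilities of 0.01 is an arbitrary choice for illustration.

```python
import numpy as np

probs = np.full(2000, 0.01)          # 2000 small word probabilities (made-up values)

product = np.prod(probs)             # 0.01**2000 is far below the float64 range
log_sum = np.sum(np.log(probs))      # the same quantity on the log scale

print(product)                       # 0.0
print(log_sum)
```

Since the logarithm is monotonically increasing, comparing the log scores of two classes picks the same class as comparing the raw products would.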

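    def classifyNB(testVec, p0Vec, p1Vec, pC1):
        testVec = np.asarray(testVec)  # accept a plain list as well as an ndarray
        # With logarithms, the product of probabilities becomes a sum
        p1 = np.sum(testVec * p1Vec) + np.log(pC1)
        p0 = np.sum(testVec * p0Vec) + np.log(1 - pC1)
        if p1 > p0:
            return 1
        else:
            return 0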
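
Written out as a formula, the comparison in `classifyNB` looks roughly like the following. The symbols are notation chosen here, not taken from the original post: $x_i$ is the $i$-th entry of `testVec`, $n$ is the vocabulary size, and $\log p(w_i \mid c_k)$ is the $i$-th entry of `p1Vec` (for $k = 1$) or `p0Vec` (for $k = 0$).

```latex
\[
  s_k = \log p(c_k) + \sum_{i=1}^{n} x_i \,\log p(w_i \mid c_k),
  \qquad k \in \{0, 1\}
\]
\[
  \hat{c} =
  \begin{cases}
    1 & \text{if } s_1 > s_0,\\
    0 & \text{otherwise,}
  \end{cases}
  \qquad p(c_1) = \texttt{pC1}, \quad p(c_0) = 1 - \texttt{pC1}
\]
```

Only the words present in the document ($x_i = 1$) contribute to the sum, which matches the element-wise product `testVec * p1Vec` in the code.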

    DocData, classVec = loadDataSet()
    voclist = createVocabList(DocData)

    # Build the word-vector matrix for all documents
    trainMulList = []
    for doc in DocData:
        trainMulList.append(WordSet2Vec(voclist, doc))

    # Convert the word-vector list and the label list to numpy.ndarray
    trainMat = np.array(trainMulList)
    trainClassVec = np.array(classVec)

    # Train the model
    p1vec, p0vec, pc1 = trainNB0(trainMat, trainClassVec)

    # Classify two test documents
    testEntry01 = ["love", "my", "dalmation"]
    VecEntry01 = WordSet2Vec(voclist, testEntry01)
    clf = classifyNB(VecEntry01, p0vec, p1vec, pc1)
    print(clf)

    testEntry02 = ["stupid", "garbage"]
    VecEntry02 = WordSet2Vec(voclist, testEntry02)
    clf = classifyNB(VecEntry02, p0vec, p1vec, pc1)
    print(clf)

Original post: https://www.cnblogs.com/ssyfj/p/13251944.html