Liveness detection can be done in several ways: blink detection, mouth-opening detection, and head-shake detection.

Here we use blink detection as the example.

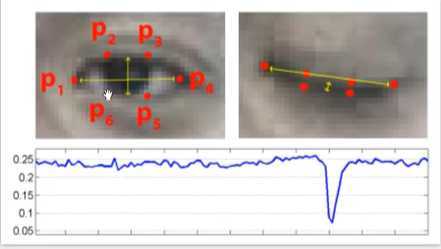

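Blink detection algorithm: it uses the eye aspect ratio (EAR). Each eye is described by six landmarks, p1 to p6: p1 and p4 are the two eye corners, p2 and p3 lie on the upper lid, and p5 and p6 lie on the lower lid. EAR compares the height of the eye with its width, so it stays roughly constant while the eye is open and drops toward zero when the eye closes. By computing EAR frame by frame we can tell whether the eye is open or closed, and therefore detect a blink.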

$$\mathrm{EAR} = \frac{\lVert p_2 - p_6 \rVert + \lVert p_3 - p_5 \rVert}{2\,\lVert p_1 - p_4 \rVert}$$
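To make the formula concrete, here is a small worked example in plain Python. The coordinates are made up for illustration and `ear` is a hypothetical helper, not part of the original code; it only uses `math.dist` (Python 3.8+) so it runs without dlib or OpenCV. The landmarks are ordered p1 to p6 as defined above.

```python
import math


def ear(p1, p2, p3, p4, p5, p6):
    """EAR from the six eye landmarks p1..p6, each an (x, y) tuple."""
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # two lid-to-lid distances
    horizontal = math.dist(p1, p4)                     # corner-to-corner width
    return vertical / (2.0 * horizontal)


# Hypothetical coordinates: eye corners 12 px apart
open_eye = [(0, 0), (4, 2), (8, 2), (12, 0), (8, -2), (4, -2)]
closed_eye = [(0, 0), (4, 0.5), (8, 0.5), (12, 0), (8, -0.5), (4, -0.5)]

print(round(ear(*open_eye), 3))    # 0.333
print(round(ear(*closed_eye), 3))  # 0.083
```

The open eye gives (4 + 4) / (2 × 12) ≈ 0.333 and the nearly closed eye gives (1 + 1) / (2 × 12) ≈ 0.083, which fall on opposite sides of the 0.3 threshold used in the code below.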

Implementation (Python, using dlib, OpenCV, imutils and SciPy):

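```python
from scipy.spatial import distance
import dlib
import cv2
from imutils import face_utils


def eye_aspect_ratio(eye):
    """
    Compute the EAR value.

    :param eye: array of the six eye landmark points
    :return: EAR value
    """
    A = distance.euclidean(eye[1], eye[5])  # vertical distance p2-p6
    B = distance.euclidean(eye[2], eye[4])  # vertical distance p3-p5
    C = distance.euclidean(eye[0], eye[3])  # horizontal distance p1-p4
    return (A + B) / (2.0 * C)


# Get dlib's frontal face detector
detector = dlib.get_frontal_face_detector()
# Pass in the path of the trained 68-point landmark model file
predictor = dlib.shape_predictor('libs/shape_predictor_68_face_landmarks.dat')

# EAR threshold below which the eye is considered closed
EAR_THRESH = 0.3
# A blink is only counted when EAR stays below the threshold
# for at least this many consecutive frames
EAR_CONSEC_FRAMES = 3

# Indices of the eye landmarks in the 68-point model
# (1-based numbers 37-42 and 43-48, converted to 0-based)
RIGHT_EYE_START = 37 - 1
RIGHT_EYE_END = 42 - 1
LEFT_EYE_START = 43 - 1
LEFT_EYE_END = 48 - 1

frame_counter = 0  # consecutive frames with EAR below the threshold
blink_counter = 0  # number of blinks detected

# Open the camera
cap = cv2.VideoCapture(0)

while True:
    ret, frame = cap.read()
    if not ret:  # stop if no frame could be read
        break
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)  # convert to grayscale
    rects = detector(gray, 1)  # face detection

    if len(rects) > 0:
        shape = predictor(gray, rects[0])  # detect landmarks on the first face
        points = face_utils.shape_to_np(shape)
        leftEye = points[LEFT_EYE_START:LEFT_EYE_END + 1]  # left-eye landmarks
        rightEye = points[RIGHT_EYE_START:RIGHT_EYE_END + 1]  # right-eye landmarks

        # Compute the EAR of each eye, then average the two
        leftEAR = eye_aspect_ratio(leftEye)
        rightEAR = eye_aspect_ratio(rightEye)
        ear = (leftEAR + rightEAR) / 2.0

        # The next two steps only visualise the eyes; the calculation does not need them
        # Find the outline (convex hull) of each eye
        leftEyeHull = cv2.convexHull(leftEye)
        rightEyeHull = cv2.convexHull(rightEye)
        # Draw the eye outlines
        cv2.drawContours(frame, [leftEyeHull], -1, (0, 255, 0), 1)
        cv2.drawContours(frame, [rightEyeHull], -1, (0, 255, 0), 1)

        # If EAR is below the threshold, count consecutive frames
        if ear < EAR_THRESH:
            frame_counter += 1
        else:
            # Eye reopened: was it closed long enough to count as a blink?
            if frame_counter >= EAR_CONSEC_FRAMES:
                print("Blink detected, please enter.")
                blink_counter += 1
                break  # liveness confirmed, leave the loop
            frame_counter = 0

    cv2.imshow('window', frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```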
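To check the threshold logic without a camera, the counting rule from the webcam loop above can be pulled out into a function that takes a list of per-frame EAR values. This is a minimal sketch: `count_blinks` and the sample trace are hypothetical, and unlike the script it keeps counting instead of stopping at the first blink.

```python
def count_blinks(ear_values, thresh=0.3, consec_frames=3):
    """Count blinks in a sequence of per-frame EAR values.

    A blink is counted when EAR stays below `thresh` for at least
    `consec_frames` consecutive frames and then rises back to or above it,
    the same rule the webcam loop applies.
    """
    blinks = 0
    below = 0  # length of the current run of low-EAR frames
    for ear in ear_values:
        if ear < thresh:
            below += 1
        else:
            if below >= consec_frames:
                blinks += 1
            below = 0
    return blinks


# Hypothetical EAR trace: open, a 3-frame closure, open, a 2-frame dip, open
trace = [0.33, 0.32, 0.12, 0.08, 0.10, 0.31, 0.34, 0.20, 0.22, 0.33]
print(count_blinks(trace))  # 1
```

The 2-frame dip is ignored because it is shorter than `consec_frames`, which is what filters out single noisy frames. A closure still in progress when the sequence ends is not counted, because the rule waits for the eye to reopen.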

Result:

Original article: https://www.cnblogs.com/isadoraytwwt/p/12906365.html