What is GlusterFS

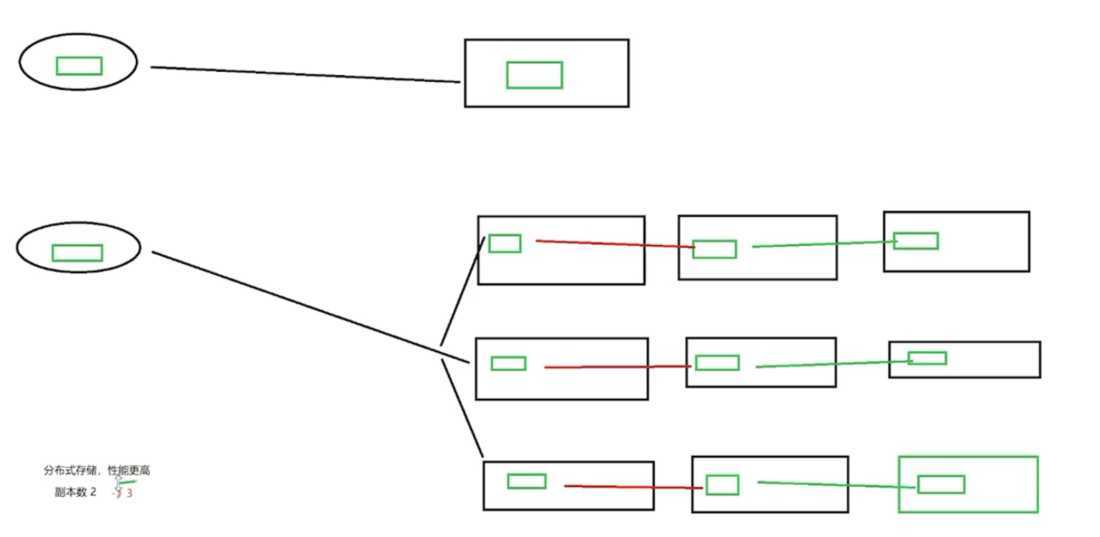

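GlusterFS is an open-source distributed file system with strong scale-out capability. It can support several petabytes of storage and thousands of clients, joining storage servers over the network into a single parallel network file system. Its main characteristics are scalability, high performance, and high availability.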

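Compared with NFS

NFS pain points: it is a single point of failure, it cannot be scaled out, and even its high-availability setups incur some interruption during failover, so it is at best pseudo high availability.

In short, the distributed replicated volume mode of GlusterFS is comparable to RAID 10.

Below we build storage with 3 replicas; each disk is 20 GB.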
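As a rough check of the RAID 10 comparison, the usable capacity of a distributed replicated volume is the total brick capacity divided by the replica count. The following is a minimal sketch, assuming every brick has the same size; `usable_gb` is a hypothetical helper written for this post, not part of the gluster CLI.

```shell
#!/bin/sh
# usable_gb BRICKS SIZE_GB REPLICA
# Hypothetical helper: usable capacity of a distributed replicated volume,
# assuming all bricks have the same size.
usable_gb() (
    bricks=$1 size_gb=$2 replica=$3
    if [ $((bricks % replica)) -ne 0 ]; then
        echo "brick count must be a multiple of the replica count" >&2
        exit 1
    fi
    # Each replica set stores only one copy's worth of data.
    echo $(( bricks / replica * size_gb ))
)

# 3 nodes x 3 disks of 20 GB each, replica 3
usable_gb 9 20 3
```

For the setup in this post it prints 60: nine 20 GB bricks hold three copies of at most 60 GB of data.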

On all nodes:

    yum install centos-release-gluster6.noarch -y
    yum install glusterfs-server -y
    systemctl start glusterd.service
    systemctl enable glusterd.service

Add 3 disks to every node, then rescan the SCSI hosts, format the disks, and mount them

    echo '- - -' > /sys/class/scsi_host/host0/scan
    echo '- - -' > /sys/class/scsi_host/host1/scan
    echo '- - -' > /sys/class/scsi_host/host2/scan
    mkfs.xfs /dev/sdb
    mkfs.xfs /dev/sdc
    mkfs.xfs /dev/sdd
    mkdir -p /gfs/test1
    mkdir -p /gfs/test2
    mkdir -p /gfs/test3
    mount /dev/sdb /gfs/test1
    mount /dev/sdc /gfs/test2
    mount /dev/sdd /gfs/test3

Add the nodes to the storage pool

    [root@k8s-master ~]# gluster pool list
    UUID                                    Hostname        State
    aa3993e8-9ef4-4449-b88c-c465e71f674e    localhost       Connected
    [root@k8s-master ~]# gluster peer probe k8s-node1
    peer probe: success.
    [root@k8s-master ~]# gluster peer probe k8s-node2
    peer probe: success.
    [root@k8s-master ~]# gluster pool list
    UUID                                    Hostname        State
    63138673-89a5-4000-ae61-3a9649b696ab    192.168.100.15  Connected
    6b86967b-a68e-40c8-aeaf-e93ab032de4b    192.168.100.16  Connected
    aa3993e8-9ef4-4449-b88c-c465e71f674e    localhost       Connected
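Before creating a volume it can be worth confirming that every peer really is in the `Connected` state. The sketch below filters `gluster pool list` output for peers that are not; `disconnected_peers` is a hypothetical helper, and it assumes the three-column layout shown in the output above.

```shell
#!/bin/sh
# disconnected_peers: reads `gluster pool list` output on stdin and prints
# the hostname of every peer whose State column is not "Connected".
disconnected_peers() {
    awk 'NR > 1 && NF >= 3 && $NF != "Connected" { print $2 }'
}

# Sample input in the same layout as the pool list output; the
# "Disconnected" row is a made-up failure case for illustration only.
printf '%s\n' \
    'UUID Hostname State' \
    '63138673-89a5-4000-ae61-3a9649b696ab 192.168.100.15 Connected' \
    '6b86967b-a68e-40c8-aeaf-e93ab032de4b 192.168.100.16 Disconnected' \
    'aa3993e8-9ef4-4449-b88c-c465e71f674e localhost Connected' |
    disconnected_peers
```

On a real node the same filter would be fed with `gluster pool list | disconnected_peers`; empty output means all peers are connected.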

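Create the distributed replicated volume

    gluster volume create goose replica 3 \
        192.168.100.14:/gfs/test1 192.168.100.15:/gfs/test1 192.168.100.16:/gfs/test1 \
        192.168.100.14:/gfs/test2 192.168.100.15:/gfs/test2 192.168.100.16:/gfs/test2 \
        192.168.100.14:/gfs/test3 192.168.100.15:/gfs/test3 192.168.100.16:/gfs/test3 \
        force

Notes: GlusterFS supports several volume types; the default is a distributed volume. force: forces the creation. To keep the bricks easy to tell apart, the mount points themselves are used as brick directories, and gluster only accepts that when this parameter is given.

The first three bricks (the three test1 directories) form one replica set and mirror each other; the other groups of three work the same way.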
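The order of the brick arguments matters: with `replica 3`, each consecutive run of three bricks becomes one replica set, so the three copies should sit on three different servers. A minimal sketch of generating that order; `brick_list` is a hypothetical helper, not a gluster command.

```shell
#!/bin/sh
# brick_list HOSTS DIRS
# Hypothetical helper: prints one replica set per line, with each set
# spanning all hosts so that no two copies land on the same server.
brick_list() (
    hosts=$1 dirs=$2
    for dir in $dirs; do
        line=
        for host in $hosts; do
            line="${line}${line:+ }${host}:${dir}"
        done
        echo "$line"
    done
)

brick_list "192.168.100.14 192.168.100.15 192.168.100.16" \
           "/gfs/test1 /gfs/test2 /gfs/test3"
```

Read line by line, the output is exactly the brick order passed to `gluster volume create`. Listing all three directories of one host first would instead place all copies of a file on a single server.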

Start the volume

    gluster volume start goose

View the volume

    [root@k8s-master ~]# gluster volume info goose
    Volume Name: goose
    Type: Distributed-Replicate
    Volume ID: acb3f994-b024-4420-8028-f1c41d15b3b5
    Status: Started
    Snapshot Count: 0
    Number of Bricks: 3 x 3 = 9
    Transport-type: tcp
    Bricks:
    Brick1: 192.168.100.14:/gfs/test1
    Brick2: 192.168.100.15:/gfs/test1
    Brick3: 192.168.100.16:/gfs/test1
    Brick4: 192.168.100.14:/gfs/test2
    Brick5: 192.168.100.15:/gfs/test2
    Brick6: 192.168.100.16:/gfs/test2
    Brick7: 192.168.100.14:/gfs/test3
    Brick8: 192.168.100.15:/gfs/test3
    Brick9: 192.168.100.16:/gfs/test3
    Options Reconfigured:
    transport.address-family: inet
    nfs.disable: on
    performance.client-io-threads: off

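Mount the volume

GlusterFS uses a client/server architecture. A client only needs the GlusterFS client package installed; for convenience I simply installed the server package, which works just as well. Any node in the pool can be named in the mount command:

    mount -t glusterfs 192.168.100.14:goose /mnt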
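The server named in the mount command is only contacted to fetch the volume layout; after that the client talks to the bricks directly. If that one server happens to be down at mount time, the mount fails, so it can help to name the other nodes as fallbacks. A minimal sketch, assuming the installed `mount.glusterfs` supports the `backup-volfile-servers` option (check `man mount.glusterfs` on your system); `mount_cmd` is a hypothetical helper that only prints the command.

```shell
#!/bin/sh
# mount_cmd VOLUME MOUNTPOINT SERVER [BACKUP_SERVER...]
# Hypothetical helper: prints (does not run) a mount command that names the
# remaining nodes as backup volfile servers.
mount_cmd() (
    volume=$1 mountpoint=$2 primary=$3
    shift 3
    backups=
    for server in "$@"; do
        backups="${backups}${backups:+:}${server}"
    done
    opts=
    if [ -n "$backups" ]; then
        opts=" -o backup-volfile-servers=${backups}"
    fi
    echo "mount -t glusterfs${opts} ${primary}:/${volume} ${mountpoint}"
)

mount_cmd goose /mnt 192.168.100.14 192.168.100.15 192.168.100.16
```

Printing rather than executing keeps the sketch safe to try on a machine without GlusterFS; run the printed line as root to mount for real.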

Test:

    [root@k8s-node2 gfs]# find /etc -maxdepth 1 -type f |xargs -i cp {} /mnt
    [root@k8s-node2 gfs]# tree /gfs/
    /gfs/
    ├── test1
    │   ├── adjtime
    │   ├── aliases.db
    │   ├── anacrontab
    │   ├── bashrc
    │   ├── centos-release
    │   ├── DIR_COLORS.256color
    │   ├── dnsmasq.conf
    │   ├── dracut.conf
    │   ├── environment
    │   ├── group
    │   ├── hostname
    │   ├── hosts.allow
    │   ├── hosts.deny
    │   ├── inittab
    │   ├── inputrc
    │   ├── issue.net
    │   ├── kdump.conf
    │   ├── krb5.conf
    │   ├── ld.so.cache
    │   ├── ld.so.conf
    │   ├── libaudit.conf
    │   ├── logrotate.conf
    │   ├── magic
    │   ├── makedumpfile.conf.sample
    │   ├── mke2fs.conf
    │   ├── networks
    │   ├── nfs.conf
    │   ├── nsswitch.conf
    │   ├── oci-register-machine.conf
    │   ├── passwd-
    │   ├── printcap
    │   ├── protocols
    │   ├── rsyslog.conf
    │   ├── securetty
    │   ├── sestatus.conf
    │   ├── shells
    │   ├── sudo-ldap.conf
    │   ├── sysctl.conf
    │   └── yum.conf
    ├── test2
    │   ├── aliases
    │   ├── cron.deny
    │   ├── crontab
    │   ├── DIR_COLORS
    │   ├── ethertypes
    │   ├── exports
    │   ├── fstab
    │   ├── group-
    │   ├── gshadow
    │   ├── host.conf
    │   ├── idmapd.conf
    │   ├── issue
    │   ├── libuser.conf
    │   ├── login.defs
    │   ├── machine-id
    │   ├── nfsmount.conf
    │   ├── nsswitch.conf.bak
    │   ├── oci-umount.conf
    │   ├── os-release
    │   ├── passwd
    │   ├── request-key.conf
    │   ├── resolv.conf
    │   ├── services
    │   ├── shadow
    │   ├── shadow-
    │   ├── statetab
    │   ├── sudo.conf
    │   └── virc
    └── test3
        ├── asound.conf
        ├── centos-release-upstream
        ├── crypttab
        ├── csh.cshrc
        ├── csh.login
        ├── DIR_COLORS.lightbgcolor
        ├── e2fsck.conf
        ├── filesystems
        ├── GREP_COLORS
        ├── gshadow-
        ├── hosts
        ├── locale.conf
        ├── man_db.conf
        ├── motd
        ├── my.cnf
        ├── netconfig
        ├── profile
        ├── rpc
        ├── rwtab
        ├── sudoers
        ├── system-release-cpe
        ├── tcsd.conf
        └── vconsole.conf

    3 directories, 90 files

    [root@k8s-node1 ~]# tree /gfs/
    /gfs/
    ├── test1
    │   ├── adjtime
    │   ├── aliases.db
    │   ├── anacrontab
    │   ├── bashrc
    │   ├── centos-release
    │   ├── DIR_COLORS.256color
    │   ├── dnsmasq.conf
    │   ├── dracut.conf
    │   ├── environment
    │   ├── group
    │   ├── hostname
    │   ├── hosts.allow
    │   ├── hosts.deny
    │   ├── inittab
    │   ├── inputrc
    │   ├── issue.net
    │   ├── kdump.conf
    │   ├── krb5.conf
    │   ├── ld.so.cache
    │   ├── ld.so.conf
    │   ├── libaudit.conf
    │   ├── logrotate.conf
    │   ├── magic
    │   ├── makedumpfile.conf.sample
    │   ├── mke2fs.conf
    │   ├── networks
    │   ├── nfs.conf
    │   ├── nsswitch.conf
    │   ├── oci-register-machine.conf
    │   ├── passwd-
    │   ├── printcap
    │   ├── protocols
    │   ├── rsyslog.conf
    │   ├── securetty
    │   ├── sestatus.conf
    │   ├── shells
    │   ├── sudo-ldap.conf
    │   ├── sysctl.conf
    │   └── yum.conf
    ├── test2
    │   ├── aliases
    │   ├── cron.deny
    │   ├── crontab
    │   ├── DIR_COLORS
    │   ├── ethertypes
    │   ├── exports
    │   ├── fstab
    │   ├── group-
    │   ├── gshadow
    │   ├── host.conf
    │   ├── idmapd.conf
    │   ├── issue
    │   ├── libuser.conf
    │   ├── login.defs
    │   ├── machine-id
    │   ├── nfsmount.conf
    │   ├── nsswitch.conf.bak
    │   ├── oci-umount.conf
    │   ├── os-release
    │   ├── passwd
    │   ├── request-key.conf
    │   ├── resolv.conf
    │   ├── services
    │   ├── shadow
    │   ├── shadow-
    │   ├── statetab
    │   ├── sudo.conf
    │   └── virc
    └── test3
        ├── asound.conf
        ├── centos-release-upstream
        ├── crypttab
        ├── csh.cshrc
        ├── csh.login
        ├── DIR_COLORS.lightbgcolor
        ├── e2fsck.conf
        ├── filesystems
        ├── GREP_COLORS
        ├── gshadow-
        ├── hosts
        ├── locale.conf
        ├── man_db.conf
        ├── motd
        ├── my.cnf
        ├── netconfig
        ├── profile
        ├── rpc
        ├── rwtab
        ├── sudoers
        ├── system-release-cpe
        ├── tcsd.conf
        └── vconsole.conf

    3 directories, 90 files

You will find that test1 is identical on every node, test2 is identical on every node, and so on.
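Comparing two long `tree` listings by eye is error-prone, and it only compares file names. One way to compare contents as well is to reduce each brick directory to a single checksum and compare those. A minimal sketch, assuming GNU `md5sum` is available; `dir_fingerprint` is a hypothetical helper, and it skips the `.glusterfs` directory that GlusterFS keeps inside each brick for its own metadata.

```shell
#!/bin/sh
# dir_fingerprint DIR
# Prints one checksum that summarizes the names and contents of all regular
# files under DIR, skipping GlusterFS's internal .glusterfs directory.
dir_fingerprint() (
    cd "$1" || exit 1
    find . -path ./.glusterfs -prune -o -type f -print |
        LC_ALL=C sort |
        while IFS= read -r file; do
            md5sum "$file"
        done |
        md5sum | cut -d' ' -f1
)

# Demo on two throwaway directories standing in for the same brick on two nodes.
a=$(mktemp -d)
b=$(mktemp -d)
echo hello > "$a/hosts"
echo hello > "$b/hosts"
if [ "$(dir_fingerprint "$a")" = "$(dir_fingerprint "$b")" ]; then
    echo "replicas match"
fi
rm -rf "$a" "$b"
```

On the cluster, running `dir_fingerprint /gfs/test1` on each node and comparing the printed values would serve the same purpose as the two listings above. It does not compare extended attributes or permissions.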

Expanding the volume

GlusterFS supports online expansion. Because the replica count is 3, bricks must be added three at a time, one new disk per node:

    gluster volume add-brick goose k8s-master:/gfs/test4 k8s-node1:/gfs/test4 k8s-node2:/gfs/test4 force
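The replica constraint can be checked before touching the cluster, and after an expansion the existing files stay on the old bricks until the volume is rebalanced. A minimal sketch, assuming one new brick per node; `expand_cmds` is a hypothetical helper that only prints the gluster commands rather than running them.

```shell
#!/bin/sh
# expand_cmds VOLUME REPLICA BRICK...
# Hypothetical helper: prints (does not run) the commands for an online
# expansion, refusing brick counts that do not form whole replica sets.
expand_cmds() (
    volume=$1 replica=$2
    shift 2
    if [ $# -eq 0 ] || [ $(( $# % replica )) -ne 0 ]; then
        echo "need a multiple of $replica bricks, got $#" >&2
        exit 1
    fi
    echo "gluster volume add-brick $volume $* force"
    # Existing files stay where they are until a rebalance spreads them
    # over the new replica set.
    echo "gluster volume rebalance $volume start"
)

expand_cmds goose 3 k8s-master:/gfs/test4 k8s-node1:/gfs/test4 k8s-node2:/gfs/test4
```

The progress of a running rebalance can be followed with `gluster volume rebalance goose status`.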

Command summary

    [root@k8s-master ~]# gluster help
      peer         node operations
      pool         view the resource pool
      volume       volume operations
    [root@k8s-master ~]# gluster peer
      detach       remove a node
      probe        add a node
      status       status
    [root@k8s-master ~]# gluster pool list      (list the nodes)
    [root@k8s-master ~]# gluster volume
      add-brick    add
      delete       delete
      .....

Integrating GlusterFS with k8s

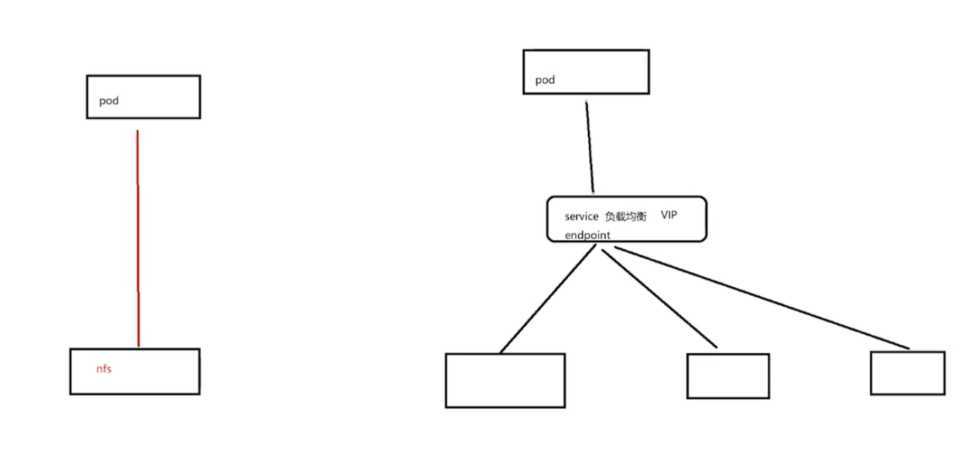

The Endpoints resource:

When a svc is created, an Endpoints object with the same name is created automatically and points at the pods.

    [root@k8s-master ~]# kubectl get pod -o wide --show-labels
    NAME          READY     STATUS    RESTARTS   AGE       IP            NODE        LABELS
    mysql-3x84m   1/1       Running   1          1d        172.18.28.3   k8s-node2   app=mysql
    myweb-d68gv   1/1       Running   1          1d        172.18.28.2   k8s-node2   app=myweb
    test          2/2       Running   30         1d        172.18.14.2   k8s-node1   app=web
    [root@k8s-master ~]# kubectl get endpoints
    NAME         ENDPOINTS             AGE
    kubernetes   192.168.200.14:6443   1d
    mysql        172.18.28.3:3306      1d
    myweb        172.18.28.2:8080      1d
    [root@k8s-master ~]# kubectl get svc
    NAME         CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    kubernetes   10.254.0.1      <none>        443/TCP          1d
    mysql        10.254.45.227   <none>        3306/TCP         1d
    myweb        10.254.217.83   <nodes>       8080:30008/TCP   1d

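To let workloads inside k8s reach a service that runs outside the cluster, create the Endpoints and the svc for that external service by hand. This is also called k8s mapping.

Do not point at a single GlusterFS node on its own, because the storage becomes unusable as soon as that node goes down. Use the load balancing of a svc instead, with the Endpoints pointing at all 3 nodes (when a pod is created with a volume of type glusterfs, the volume is mounted automatically).

The steps below set up that connection; the PV and PVC variant is not covered here.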

1. Create the goose namespace (default already contains these services, so a separate namespace is created for convenience)

    [root@k8s-master ~]# kubectl create namespace goose

2. Create the Endpoints. Note that its name must be the same as the svc created in the next step, and there must be no label selector.

    vi glusterfs-ep.yaml

    apiVersion: v1
    kind: Endpoints
    metadata:
      name: glusterfs
      namespace: goose
    subsets:
    - addresses:
      - ip: 192.168.100.14
      - ip: 192.168.100.15
      - ip: 192.168.100.16
      ports:
      - port: 49152
        protocol: TCP

    [root@k8s-master pvc]# kubectl get endpoints -n goose
    NAME        ENDPOINTS                                                            AGE
    glusterfs   192.168.100.14:49152,192.168.100.15:49152,192.168.100.16:49152       27m
    mysql       172.18.14.3:3306                                                     20m
    myweb       172.18.28.4:8080                                                     15m
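When the list of storage nodes changes, the Endpoints manifest has to be edited by hand. It can also be generated from the list of IPs. A minimal sketch; `endpoints_yaml` is a hypothetical helper, and the name, namespace, port, and addresses passed to it are the values used in this post.

```shell
#!/bin/sh
# endpoints_yaml NAME NAMESPACE PORT IP...
# Hypothetical helper: prints an Endpoints manifest listing every given IP.
endpoints_yaml() (
    name=$1 namespace=$2 port=$3
    shift 3
    printf 'apiVersion: v1\nkind: Endpoints\nmetadata:\n'
    printf '  name: %s\n  namespace: %s\n' "$name" "$namespace"
    printf 'subsets:\n- addresses:\n'
    for ip in "$@"; do
        printf '  - ip: %s\n' "$ip"
    done
    printf '  ports:\n  - port: %s\n    protocol: TCP\n' "$port"
)

endpoints_yaml glusterfs goose 49152 192.168.100.14 192.168.100.15 192.168.100.16
```

The output can be saved as glusterfs-ep.yaml or piped straight into `kubectl create -f -`.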

3. Create the svc; it is linked to the Endpoints by name

    vi glusterfs-svc.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: glusterfs
      namespace: goose
    spec:
      ports:
      - port: 49152
        protocol: TCP
        targetPort: 49152
      type: ClusterIP
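The link between the two objects rests on two things: identical names, and a svc without a selector (with a selector, Kubernetes manages the Endpoints itself and the hand-written addresses would be replaced). Both can be checked before applying the files. This is a crude sketch that pattern-matches plain, single-document manifests like the ones in this post rather than parsing YAML properly; `manifest_name` and `check_pair` are hypothetical helpers.

```shell
#!/bin/sh
# manifest_name FILE: prints metadata.name from a simple one-document manifest.
manifest_name() {
    awk '/^metadata:/ { in_meta = 1; next }
         in_meta && /^[^ ]/ { in_meta = 0 }
         in_meta && $1 == "name:" { print $2; exit }' "$1"
}

# check_pair ENDPOINTS_FILE SERVICE_FILE
# Succeeds only if both names match and the Service has no selector.
check_pair() {
    [ "$(manifest_name "$1")" = "$(manifest_name "$2")" ] || {
        echo "name mismatch" >&2
        return 1
    }
    if grep -q '^ *selector:' "$2"; then
        echo "Service has a selector; its Endpoints would be managed automatically" >&2
        return 1
    fi
    echo "ok"
}

# Only runs when the two files from this post are in the current directory.
if [ -f glusterfs-ep.yaml ] && [ -f glusterfs-svc.yaml ]; then
    check_pair glusterfs-ep.yaml glusterfs-svc.yaml
fi
```

After both objects exist, `kubectl get endpoints -n goose` should still list the three storage node addresses for glusterfs.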

4. Create the rc resource. The other resources only need a namespace added, so they are not listed again here; see the earlier experiments. For mysql the volume type has to be changed:

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: mysql
      namespace: goose
    spec:
      replicas: 1
      selector:
        app: mysql
      template:
        metadata:
          labels:
            app: mysql
        spec:
          containers:
          - name: mysql
            image: 192.168.100.14:5000/mysql:5.7
            ports:
            - containerPort: 3306
            env:
            - name: MYSQL_ROOT_PASSWORD
              value: '123456'
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
          volumes:
          - name: data
            glusterfs:
              path: goose
              endpoints: glusterfs

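Mount the volume on any node and the data is visible; the volume has also been mounted automatically on the node where the pod runs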

    [root@k8s-node1 test2]# df -h
    Filesystem               Size  Used Avail Use% Mounted on
    /dev/mapper/centos-root   36G  2.3G   34G   7% /
    devtmpfs                 1.3G     0  1.3G   0% /dev
    tmpfs                    1.3G     0  1.3G   0% /dev/shm
    tmpfs                    1.3G  9.2M  1.3G   1% /run
    tmpfs                    1.3G     0  1.3G   0% /sys/fs/cgroup
    /dev/sda1                497M  114M  384M  23% /boot
    overlay                   36G  2.3G   34G   7% /var/lib/docker/overlay2/2eadc0c7b519ce60956ac109f5f1c22171b55c9775b0806bc88fb905f795167b/merged
    shm                       64M     0   64M   0% /var/lib/docker/containers/1109669df90924295ca2e1ed8f83020a897c4af2219aab337eb20669e9a5db31/shm
    overlay                   36G  2.3G   34G   7% /var/lib/docker/overlay2/db5fa43b3ff0eaf59b7d3634eae51af1d32515875f38ce9c2d08f228588261f6/merged
    tmpfs                    266M     0  266M   0% /run/user/0
    /dev/sdb                  20G   95M   20G   1% /gfs/test1
    192.168.100.14:goose      56G  2.9G   53G   6% /var/lib/kubelet/pods/37604daa-782f-11ea-8f95-000c290a1e33/volumes/kubernetes.io~glusterfs/data    <-- this one
    overlay                   36G  2.3G   34G   7% /var/lib/docker/overlay2/41c92f70d356ffff554e84b71c8afa5e848d5d8b29c247c3009aeeab556ef8d2/merged
    shm                       64M     0   64M   0% /var/lib/docker/containers/9bda30c9ade750532e5a1b2325d44c68f377d6813e84da7c8d4b27160958074d/shm
    overlay                   36G  2.3G   34G   7% /var/lib/docker/overlay2/70c5cfbd9e483f00b2edba2b66aab6c2a2426f11ad86d140678436e329332989/merged
    overlay                   36G  2.3G   34G   7% /var/lib/docker/overlay2/f59c23528b64d7047ce001458dbd0873aaff06c79cf15bfc821da75ca816f83c/merged
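On a busy node the GlusterFS line is easy to miss among the overlay and shm entries. Native GlusterFS mounts carry the file system type `fuse.glusterfs`, so they can be filtered out of the mount table directly. A minimal sketch; `gluster_mounts` is a hypothetical helper that expects input in the `/proc/mounts` layout (source, mount point, type, ...).

```shell
#!/bin/sh
# gluster_mounts: reads /proc/mounts-style lines on stdin and prints
# "source mountpoint" for every GlusterFS FUSE mount.
gluster_mounts() {
    awk '$3 == "fuse.glusterfs" { print $1, $2 }'
}

if [ -r /proc/mounts ]; then
    gluster_mounts < /proc/mounts
fi
```

On the node running the mysql pod this would be expected to print the `192.168.100.14:goose` source together with its path under /var/lib/kubelet/pods; on a machine without GlusterFS mounts it prints nothing.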

    [root@k8s-node2 mnt]# ls
    auto.cnf    ca.pem           client-key.pem  ibdata1      ib_logfile1  mysql               private_key.pem  server-cert.pem  sys
    ca-key.pem  client-cert.pem  ib_buffer_pool  ib_logfile0  ibtmp1       performance_schema  public_key.pem   server-key.pem

    [root@k8s-master pvc]# tree /gfs/
    /gfs/
    ├── test1
    │   ├── client-key.pem
    │   ├── ib_buffer_pool
    │   ├── ib_logfile1
    │   ├── mysql
    │   │   ├── columns_priv.frm
    │   │   ├── columns_priv.MYI
    │   │   ├── db.MYD
    │   │   ├── func.MYD
    │   │   ├── general_log.frm
    │   │   ├── gtid_executed.frm
    │   │   ├── innodb_index_stats.frm
    │   │   ├── proc.frm
    │   │   ├── procs_priv.frm
    .......

Original post: https://www.cnblogs.com/hsgoose/p/12845514.html