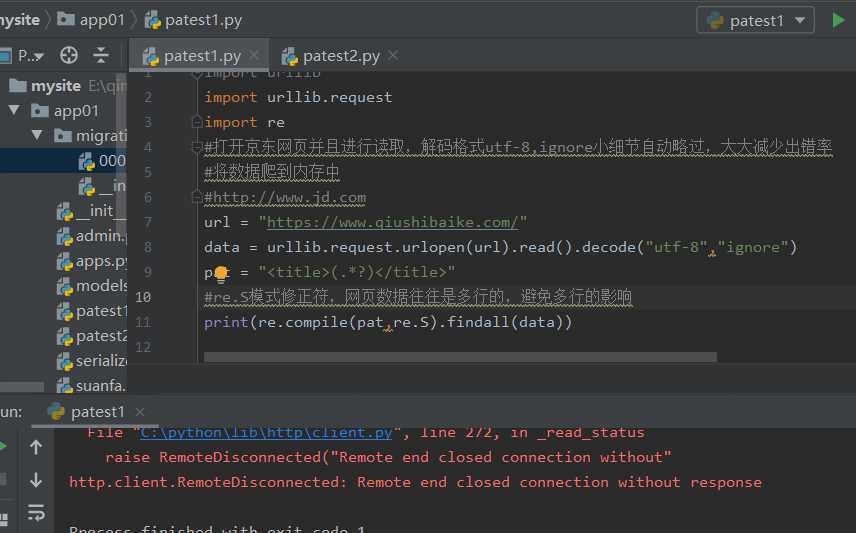

import urllib.request import re #ignore小细节自动略过,大大减少出错率 #将数据爬到内存中 #http://www.jd.com url = "https://www.qiushibaike.com/" data = urllib.request.urlopen(url).read().decode("utf-8","ignore") pat = "<title>(.*?)</title>" #re.S模式修正符,网页数据往往是多行的,避免多行的影响 print(re.compile(pat,re.S).findall(data))

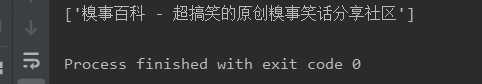

import urllib.request import re #浏览器伪装 #建立opener对象,opener可以进行设置 opener = urllib.request.build_opener() #构建元祖User-Agent,键值 UA = ("user-agent","Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.132 Safari/537.36") #将其放入addheaders属性中 opener.addheaders = [UA] #将opener安装为全局 urllib.request.install_opener(opener) url = "http://www.qiushibaike.com" pat = "<title>(.*?)</title>" data = urllib.request.urlopen(url).read().decode("utf-8","ignore") print(re.compile(pat,re.S).findall(data))

原文:https://www.cnblogs.com/u-damowang1/p/12724245.html