What data types does Spark have? https://spark.apache.org/docs/latest/sql-reference.html

Spark data types

Spark SQL and DataFrames support the following data types:

- ByteType: Represents 1-byte signed integer numbers. The range of numbers is from -128 to 127.
- ShortType: Represents 2-byte signed integer numbers. The range of numbers is from -32768 to 32767.
- IntegerType: Represents 4-byte signed integer numbers. The range of numbers is from -2147483648 to 2147483647.
- LongType: Represents 8-byte signed integer numbers. The range of numbers is from -9223372036854775808 to 9223372036854775807.
- FloatType: Represents 4-byte single-precision floating point numbers.
- DoubleType: Represents 8-byte double-precision floating point numbers.
- DecimalType: Represents arbitrary-precision signed decimal numbers. Backed internally by java.math.BigDecimal. A BigDecimal consists of an arbitrary-precision integer unscaled value and a 32-bit integer scale.
- StringType: Represents character string values.
- BinaryType: Represents byte sequence values.
- BooleanType: Represents boolean values.
- TimestampType: Represents values comprising the fields year, month, day, hour, minute, and second.
- DateType: Represents values comprising the fields year, month, and day.
- ArrayType(elementType, containsNull): Represents values comprising a sequence of elements of type elementType. containsNull indicates whether elements in an ArrayType value can be null.
- MapType(keyType, valueType, valueContainsNull): Represents values comprising a set of key-value pairs. The data type of the keys is described by keyType and the data type of the values by valueType. For a MapType value, keys are not allowed to be null. valueContainsNull indicates whether values of a MapType value can be null.
- StructType(fields): Represents values with the structure described by a sequence of StructFields (fields).

StructField(name, dataType, nullable): Represents a field in a StructType. The name of a field is given by name and its data type by dataType. nullable indicates whether values of this field can be null.

The corresponding PySpark data types are in pyspark.sql.types.
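The integer ranges above follow directly from the byte widths (two's complement), and the DecimalType note describes BigDecimal as an unscaled integer plus a scale. A small plain-Python sketch (no Spark needed; the helper names are hypothetical) that reproduces both:

```python
from decimal import Decimal

# Byte widths of the integral types listed above.
INTEGRAL_WIDTHS = {"ByteType": 1, "ShortType": 2, "IntegerType": 4, "LongType": 8}


def signed_range(num_bytes):
    """Two's-complement (min, max) of a signed integer that is num_bytes wide."""
    bits = 8 * num_bytes
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


def unscaled_and_scale(text):
    """Split a decimal string into (unscaled value, scale), the pair a
    java.math.BigDecimal stores: value == unscaled * 10 ** -scale."""
    sign, digits, exponent = Decimal(text).as_tuple()
    unscaled = int("".join(str(d) for d in digits))
    return (-unscaled if sign else unscaled), -exponent


for name, width in INTEGRAL_WIDTHS.items():
    print(name, signed_range(width))
print(unscaled_and_scale("123.45"))  # (12345, 2)
```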

Some common conversion scenarios:

1. F.date_format converts a date/timestamp/string to a string value; the format of the resulting string is given by the second argument.

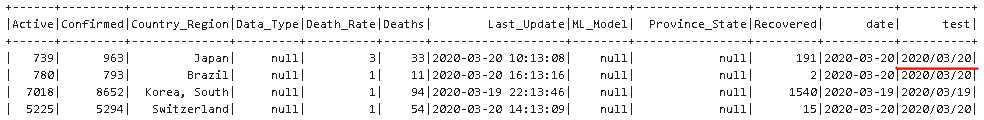

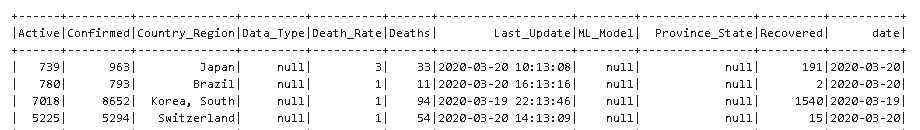

```python
from pyspark.sql import functions as F
df.withColumn('test', F.date_format(F.col('Last_Update'), "yyyy/MM/dd")).show()
```
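To see what the pattern string does without a Spark session, here is a plain-Python sketch. It assumes only the pattern letters yyyy, MM, dd, HH, mm, ss are used and maps them to strftime directives; the helper names are hypothetical and this is not a full implementation of Spark's datetime patterns.

```python
import re
from datetime import datetime

# Hypothetical helper: only these Spark pattern letters are translated.
_SPARK_TO_STRFTIME = {"yyyy": "%Y", "MM": "%m", "dd": "%d",
                      "HH": "%H", "mm": "%M", "ss": "%S"}
# Longest tokens first so "yyyy" wins; matching is case-sensitive ("MM" month vs "mm" minute).
_TOKEN = re.compile("|".join(sorted(_SPARK_TO_STRFTIME, key=len, reverse=True)))


def spark_pattern_to_strftime(pattern):
    escaped = pattern.replace("%", "%%")  # keep literal % signs literal for strftime
    return _TOKEN.sub(lambda m: _SPARK_TO_STRFTIME[m.group(0)], escaped)


def date_format_like(value, pattern):
    """Format a datetime roughly as date_format(col, pattern) would."""
    return value.strftime(spark_pattern_to_strftime(pattern))


last_update = datetime(2020, 3, 27, 23, 5, 9)
print(date_format_like(last_update, "yyyy/MM/dd"))           # 2020/03/27
print(date_format_like(last_update, "yyyy-MM-dd HH:mm:ss"))  # 2020-03-27 23:05:09
```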

2. After converting to a string, you can cast it to the type you need, for example to date as below:

```python
from pyspark.sql import functions as F
df = df.withColumn('date', F.date_format(F.col('Last_Update'), "yyyy-MM-dd").cast("date"))
```

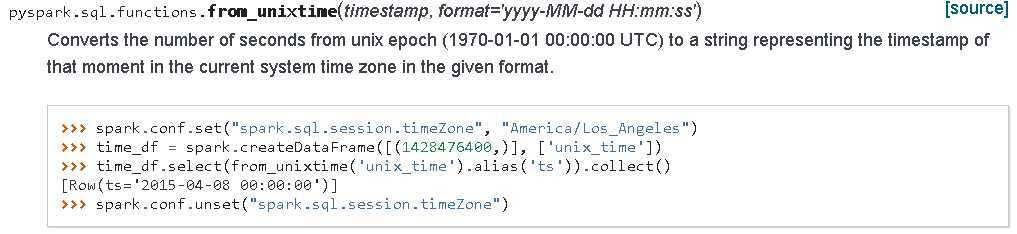
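The two-step "format, then cast" idea can be mimicked in plain Python. This is a rough analogue only, assuming Spark's default (non-ANSI) behaviour where an unparseable string becomes null; the helper names are hypothetical, and Python's date.fromisoformat does not accept exactly the same inputs as Spark's cast.

```python
from datetime import date, datetime


def cast_to_date(text):
    """Rough analogue of Spark's default (non-ANSI) cast(string AS date):
    a string that does not parse yields None (Spark's null) instead of an error."""
    try:
        return date.fromisoformat(text)
    except (TypeError, ValueError):
        return None


def format_then_cast(ts):
    text = ts.strftime("%Y-%m-%d")  # step 1: like date_format(col, "yyyy-MM-dd")
    return cast_to_date(text)        # step 2: like .cast("date")


print(format_then_cast(datetime(2020, 3, 27, 23, 5, 9)))  # 2020-03-27
print(cast_to_date("27/03/2020"))                         # None
```

In effect, formatting to "yyyy-MM-dd" and casting back drops the time-of-day part.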

3. Convert a timestamp in seconds (counted from 1970-01-01, the Unix epoch) into a date-formatted string, e.g. with F.from_unixtime:

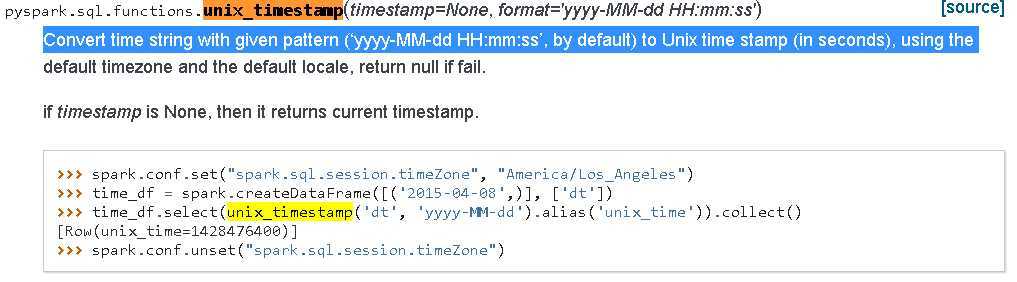
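In plain Python the same conversion is datetime.fromtimestamp followed by strftime. A minimal sketch, assuming UTC output (Spark renders the result in the session time zone instead); the default format below mirrors from_unixtime's default "yyyy-MM-dd HH:mm:ss", and the helper name is hypothetical.

```python
from datetime import datetime, timezone


def from_unixtime_like(seconds, fmt="%Y-%m-%d %H:%M:%S", tz=timezone.utc):
    """Seconds since 1970-01-01 00:00:00 UTC -> formatted string.
    Spark uses the session time zone; here the zone is an explicit argument (UTC by default)."""
    return datetime.fromtimestamp(seconds, tz=tz).strftime(fmt)


print(from_unixtime_like(0))           # 1970-01-01 00:00:00
print(from_unixtime_like(1585353600))  # 2020-03-28 00:00:00
```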

4. F.unix_timestamp converts a date string into a timestamp in seconds; it is the inverse of the operation above.

unix_timestamp ignores milliseconds (it returns whole seconds), so if you need millisecond precision you can use the following approach:

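```python
from pyspark.sql import functions as F
# substring(TIME, -7, 3) picks the "SSS" digits; assumes TIME ends in ".SSS zzz", e.g. "...:56.789 UTC"
df1 = df.withColumn("unix_timestamp", F.unix_timestamp(df.TIME, 'dd-MMM-yyyy HH:mm:ss.SSS z') + F.substring(df.TIME, -7, 3).cast('float') / 1000)
```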

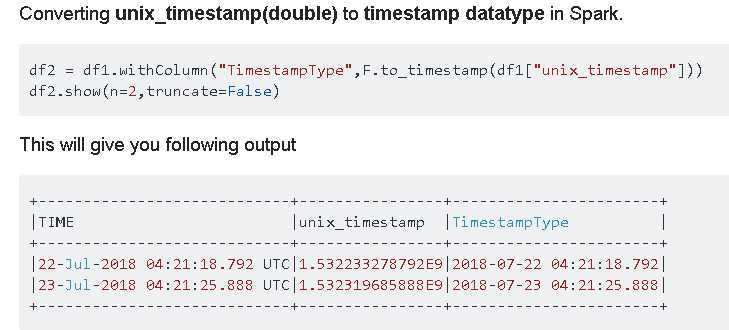
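The arithmetic of that expression (whole seconds plus the three millisecond digits divided by 1000) can be replayed in plain Python. This sketch makes a hypothetical simplification: the zone suffix is always " UTC", so the string always ends in ".SSS UTC" and the offsets match substring(TIME, -7, 3).

```python
from datetime import datetime, timezone


def unix_seconds_with_ms(text):
    """Replays the Spark expression above on strings like '28-Mar-2020 00:00:00.123 UTC'.
    Simplifying assumption: the zone suffix is always ' UTC'."""
    if not text.endswith(" UTC"):
        raise ValueError("this sketch only handles a ' UTC' suffix")
    # Drop ".SSS UTC" (8 chars) and parse whole seconds, like unix_timestamp does.
    whole = datetime.strptime(text[:-8], "%d-%b-%Y %H:%M:%S").replace(tzinfo=timezone.utc)
    seconds = int(whole.timestamp())
    # text[-7:-4] is the same 3 characters as substring(TIME, -7, 3): the millisecond digits.
    millis = int(text[-7:-4])
    return seconds + millis / 1000


print(unix_seconds_with_ms("28-Mar-2020 00:00:00.123 UTC"))
```

Note that the position-based substring only works while the zone abbreviation has a fixed length; a zone like "CEST" would shift the offsets.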

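5. To convert timestamp seconds into TimestampType, you can use F.to_timestamp (on Spark 3.1+, F.timestamp_seconds also converts a seconds column directly).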
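The difference from step 3 is that the result is a timestamp value (comparable, usable in date arithmetic) rather than a string. A plain-Python counterpart, assuming UTC (Spark displays timestamps in the session time zone); the helper name is hypothetical.

```python
from datetime import datetime, timezone


def timestamp_from_seconds(seconds):
    """Seconds since the epoch (int or float, e.g. the output of step 4) -> a
    timezone-aware datetime, the plain-Python counterpart of a TimestampType value.
    UTC is an assumption; Spark displays timestamps in the session time zone."""
    return datetime.fromtimestamp(seconds, tz=timezone.utc)


ts = timestamp_from_seconds(1585353600.123)
print(ts.isoformat())  # 2020-03-28T00:00:00.123000+00:00
```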

Ref:

Original post: https://www.cnblogs.com/mashuai-191/p/12580628.html