prometheus相关的服务都部署在monitoring这个namespace下

创建namespace

$ cd /opt/k8s/prometheus

$ cat>1-namespace.yml<<EOF

apiVersion: v1

kind: Namespace

metadata:

name: monitoring

EOF

创建prometheus对应的配置文件,利用kubernetes的ConfigMap

$ cd /opt/k8s/prometheus

$ cat>2-prom-cnfig.yml<<EOF

apiVersion: v1

kind: ConfigMap

metadata:

name: prom-config

namespace: monitoring

data:

prometheus.yml: |

global:

scrape_interval: 15s

scrape_timeout: 15s

scrape_configs:

- job_name: ‘prometheus‘

static_configs:

- targets: [‘localhost:9090‘]

EOF

创建用来存储prometheus数据的pv、pvc(采用本地存储、可以通过搭建NFS、GlusterFs等分布式文件系统代替)

$ cd /opt/k8s/prometheus

$ cat>3-prom-pv.yml<<EOF

kind: PersistentVolume

apiVersion: v1

metadata:

namespace: monitoring

name: prometheus

labels:

type: local

app: prometheus

spec:

capacity:

storage: 10Gi

accessModes:

- ReadWriteOnce

hostPath:

path: /opt/k8s/prometheus/data

---

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

namespace: monitoring

name: prometheus-claim

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

EOF

创建启动prometheus的文件,以deployment形式部署,外部访问通过NodePort类型的service

$ cd /opt/k8s/prometheus

$ cat>4-prometheus.yml<<EOF

apiVersion: apps/v1

kind: Deployment

metadata:

name: prometheus

namespace: monitoring

labels:

app: prometheus

spec:

selector:

matchLabels:

app: prometheus

replicas: 1

template:

metadata:

labels:

app: prometheus

spec:

containers:

- name: prometheus

image: prom/prometheus:v2.16.0

args:

- ‘--config.file=/etc/prometheus/prometheus.yml‘

- ‘--storage.tsdb.path=/prometheus‘

- "--storage.tsdb.retention=7d"

- "--web.enable-lifecycle"

ports:

- containerPort: 9090

volumeMounts:

- mountPath: "/prometheus"

subPath: prometheus

name: data

- mountPath: "/etc/prometheus"

name: config

resources:

requests:

cpu: 500m

memory: 2Gi

limits:

cpu: 500m

memory: 2Gi

volumes:

- name: config

configMap:

name: prom-config

- name: data

persistentVolumeClaim:

claimName: prometheus-claim

---

apiVersion: v1

kind: Service

metadata:

namespace: monitoring

name: prometheus

spec:

type: NodePort

ports:

- port: 9090

targetPort: 9090

nodePort: 9090

selector:

app: prometheus

EOF

启动Prometheus服务

$ cd /opt/k8s/prometheus

$ mkdir data & chmod -R 777 data

$ kubectl create -f 1-namespace.yml -f 2-prom-cnfig.yml -f 3-prom-pv.yml -f 4-prometheus.yml

查看组件状态,确保所有服务正常启动

$ kubectl get all -n monitoring

NAME READY STATUS RESTARTS AGE

pod/prometheus-57cf64764d-xqnvl 1/1 Running 0 51s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/prometheus NodePort 10.254.209.164 <none> 9090:9090/TCP 51s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/prometheus 1/1 1 1 51s

NAME DESIRED CURRENT READY AGE

replicaset.apps/prometheus-57cf64764d 1 1 1 51s

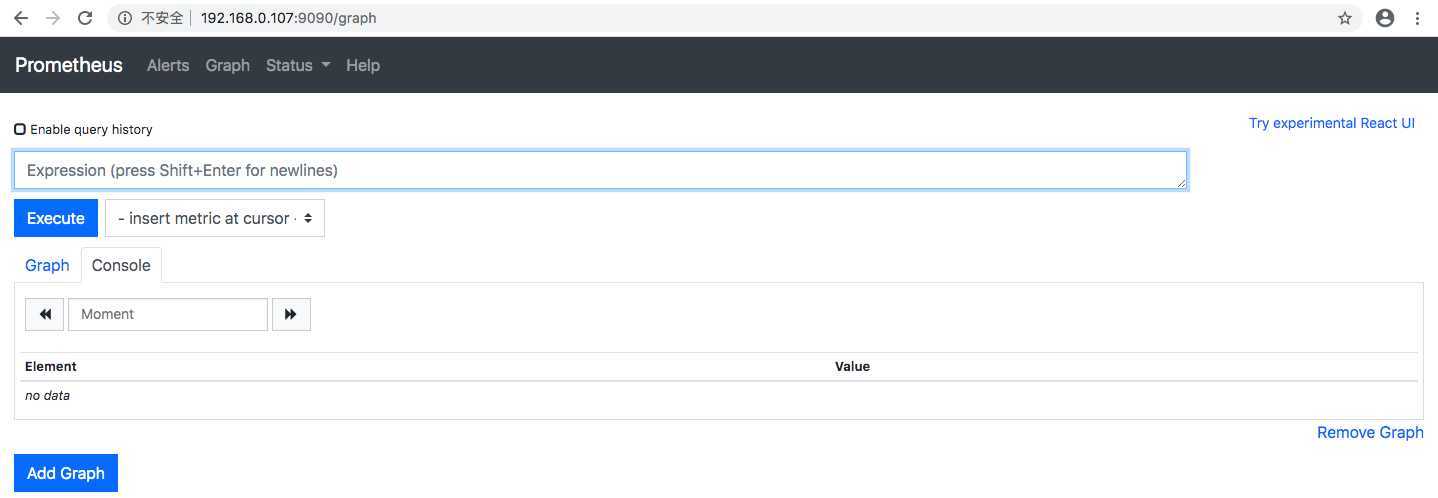

界面访问

本文采用自己部署组件的形式,看如何一步一步搭建一个监控平台。对于有快速搭建需求的,可以参考 kube-prometheus

因为要监控每一个节点,所以采用Daemonset控制器来部署node-exporter,在每个节点上都运行一个Pod

启动文件

$ cd /opt/k8s/prometheus

$ cat>5-node-exporter.yml<<EOF

apiVersion: apps/v1

kind: DaemonSet

metadata:

labels:

app: node-exporter

name: node-exporter

namespace: monitoring

spec:

selector:

matchLabels:

app: node-exporter

template:

metadata:

labels:

app: node-exporter

spec:

containers:

- name: node-exporter

image: 192.168.0.107/prometheus/node-exporter:v0.18.1

args:

- --web.listen-address=:9100

- --path.procfs=/host/proc

- --path.sysfs=/host/sys

- --path.sysfs=/host/sys

- --path.rootfs=/host/root

- --no-collector.hwmon

- --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+)($|/)

- --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$

resources:

limits:

cpu: 250m

memory: 180Mi

requests:

cpu: 102m

memory: 180Mi

ports:

- containerPort: 9100

volumeMounts:

- mountPath: /host/proc

name: proc

readOnly: false

- mountPath: /host/sys

name: sys

readOnly: false

- mountPath: /host/root

mountPropagation: HostToContainer

name: root

readOnly: true

hostNetwork: true

hostPID: true

nodeSelector:

kubernetes.io/os: linux

securityContext:

runAsNonRoot: true

runAsUser: 65534

tolerations:

- operator: Exists

volumes:

- hostPath:

path: /proc

name: proc

- hostPath:

path: /sys

name: sys

- hostPath:

path: /

name: root

EOF

启动node-exporter

$ cd /opt/k8s/prometheus

$ kubectl create -f 5-node-exporter.yml

$ kubectl -n monitoring get pod | grep node

node-exporter-854vr 1/1 Running 6 50m

node-exporter-lv9pv 1/1 Running 0 50m

通过prometheus收集node-exporter的指标信息

因为集群的节点之后可能会动态扩容和缩减,所以不便采用静态配置的形式,Prometheus给我们提供了Kubernetes对应的服务发现功能,可以实现对Kubernetes的动态监控。其中对节点的监控利用node的服务发现方式,在prometheus的配置文件中追加如下配置(对应的2-prom-cnfig.yml也需要追加,否则Configmap重建后这些信息就丢掉了)

$ kubectl -n monitoring edit configmaps prom-config

- job_name: "kubernetes-nodes"

kubernetes_sd_configs:

- role: node

relabel_configs:

- source_labels: [__address__]

regex: ‘(.*):10250‘

replacement: ‘${1}:9100‘

target_label: __address__

action: replace

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

追加完成后执行如下命令,重新加载配置项,至于为何这样配置,后面章节配置原理具体讲解

$ curl -XPOST http://192.168.0.107:9090/-/reload

此时,prometheus就会尝试获取集群node信息,查看promethes的日志信息,会发现如下错误提示

level=error ts=2020-03-22T10:37:13.856Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:333: Failed to list *v1.Node: nodes is forbidden: User \"system:serviceaccount:monitoring:default\" cannot list resource \"nodes\" in API group \"\" at the cluster scope"

意思是用默认的serviceaccount不能 list *v1.Node,因此需要我们为Prometheus重新创建serviceaccount,并赋予相应的权限

创建prometheus对应的serviceaccount,并赋予相应的权限

$ cd /opt/k8s/prometheus

$ cat>6-prometheus-serivceaccount-role.yaml<<EOF

apiVersion: v1

kind: ServiceAccount

metadata:

name: prometheus-k8s

namespace: monitoring

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: prometheus-k8s

rules:

- apiGroups: [""]

resources:

- nodes/proxy

- nodes

- namespaces

- endpoints

- pods

- services

verbs: ["get","list","watch"]

- apiGroups: [""]

resources:

- nodes/metrics

verbs: ["get"]

- nonResourceURLs:

- /metrics

verbs: ["get"]

- apiGroups:

- extensions

resources:

- ingresses

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: prometheus-k8s

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: prometheus-k8s

subjects:

- kind: ServiceAccount

name: prometheus-k8s

namespace: monitoring

EOF

$ cd /opt/k8s/prometheus

$ kubectl create -f 6-prometheus-serivceaccount-role.yaml

修改Prometheus的启动yaml,追加serivceaccount配置,重启Prometheus

...

spec:

serviceAccountName: prometheus-k8s

containers:

- name: prometheus

...

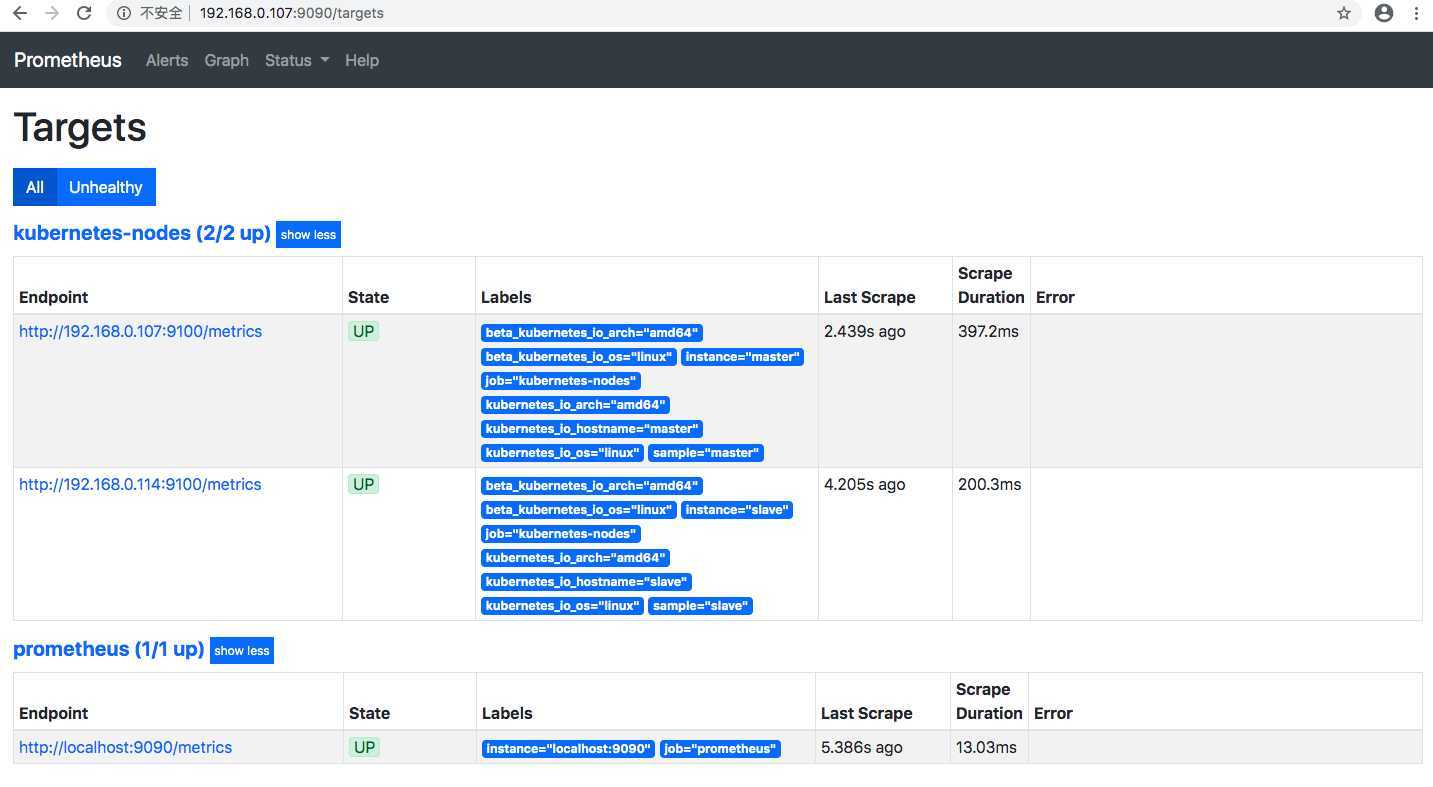

查看prometheus监控对象列表

目标地址查找

当kubernetes_sd_config配置的role是node时,prometheus启动后会调用kubernetes的 LIST Node API获取node相关信息,并从node对象中获取IP和PORT来构成监控地址。

其中IP获取按照如下顺序InternalIP, ExternalIP, LegacyHostIP, HostName查找

Port值默认采用Kubelet的HTTP port。

通过如下命令可查看 list Node接口返回的IP 和 Port信息

$ kubectl get node -o=jsonpath=‘{range .items[*]}{.status.addresses}{"\t"}{.status.daemonEndpoints}{"\n"}{end}‘

[map[address:192.168.0.107 type:InternalIP] map[address:master type:Hostname]] map[kubeletEndpoint:map[Port:10250]]

[map[address:192.168.0.114 type:InternalIP] map[address:slave type:Hostname]] map[kubeletEndpoint:map[Port:10250]]

上述返回结果表示集群中有两个节点,构成的taget地址分别是

192.168.0.107:10250

192.168.0.114:10250

relabe_configs

Relabeling可以让Prometheus在抓取数据之前动态的修改标签的值。Prometheus有许多默认标签,其中下面几个和我们处理有关

__address__:初始化时会设置成目标地址对应的<host>:<port>instance:__address__标签的值经过Relabel阶段后,会设置给标签 instance, 即instance是__address__标签经过Relabel后的值__scheme__ :默认值 http__metrics_path__ 默认值 /metricsPrometheus拉取指标信息的目的地址是把这几个标签连接起来__scheme__://instance/__metrics_path__

我们启动node-expeorter后,在各个节点的:9100/metrics上暴露了node指标信息,然后在prometheus中追加了如下配置段

- job_name: "kubernetes-nodes"

kubernetes_sd_configs:

- role: node

relabel_configs:

- source_labels: [__address__]

regex: ‘(.*):10250‘

replacement: ‘${1}:9100‘

target_label: __address__

action: replace

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

其中relabel_configs的第一个配置片段

- source_labels: [__address__]

regex: ‘(.*):10250‘

replacement: ‘${1}:9100‘

target_label: __address__

action: replace

__address__中匹配出IP地址${IP}:9100__address__的值替换成replacement,即 ${IP}:9100经过这些步骤后,拼接成的获取指标地址为[http://192.168.0.107:9100/metrics, http://192.168.0.114:9100/metrics],和我们的node-exporter暴露的指标地址匹配,就可以拉取Node的指标信息了

另外因为prometheus会将node对应的标签变成__meta_kubernetes_node_label_<labelname>,所以追加了一个labelmap的动作,再把这些标签名字还原出来

完整配置例子参考prometheus-kubernetes

kubelet会收集一些api server 、etcd等服务的指标信息,可以通过如下命令查看

$ kubectl get --raw https://192.168.0.107:10250/metrics

接下来通过在promehteus中追加配置,让promehteus拉取这些信息

- job_name: "kubernetes-kubelet"

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

kubernetes_sd_configs:

- role: node

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

kubelet默认集成了cAdvisor,用来对集群中容器信息进行收集,Kubernetes 1.7.3以及之后的版本中,把cAdvisor收集的指标信息(以container_开头)从Kubelet对应的/metrics中移除了,所以需要额外配置一个收集cAdvisor的job。cAdvisor指标调用命令

$ kubectl get --raw https://192.168.0.107:6443/api/v1/nodes/master/proxy/metrics/cadvisor

接下来通过在promehteus中追加配置,让promehteus拉取这些信息

- job_name: "kubernetes-cadvisor"

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

kubernetes_sd_configs:

- role: node

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- target_label: __address__

replacement: kubernetes.default.svc:443

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

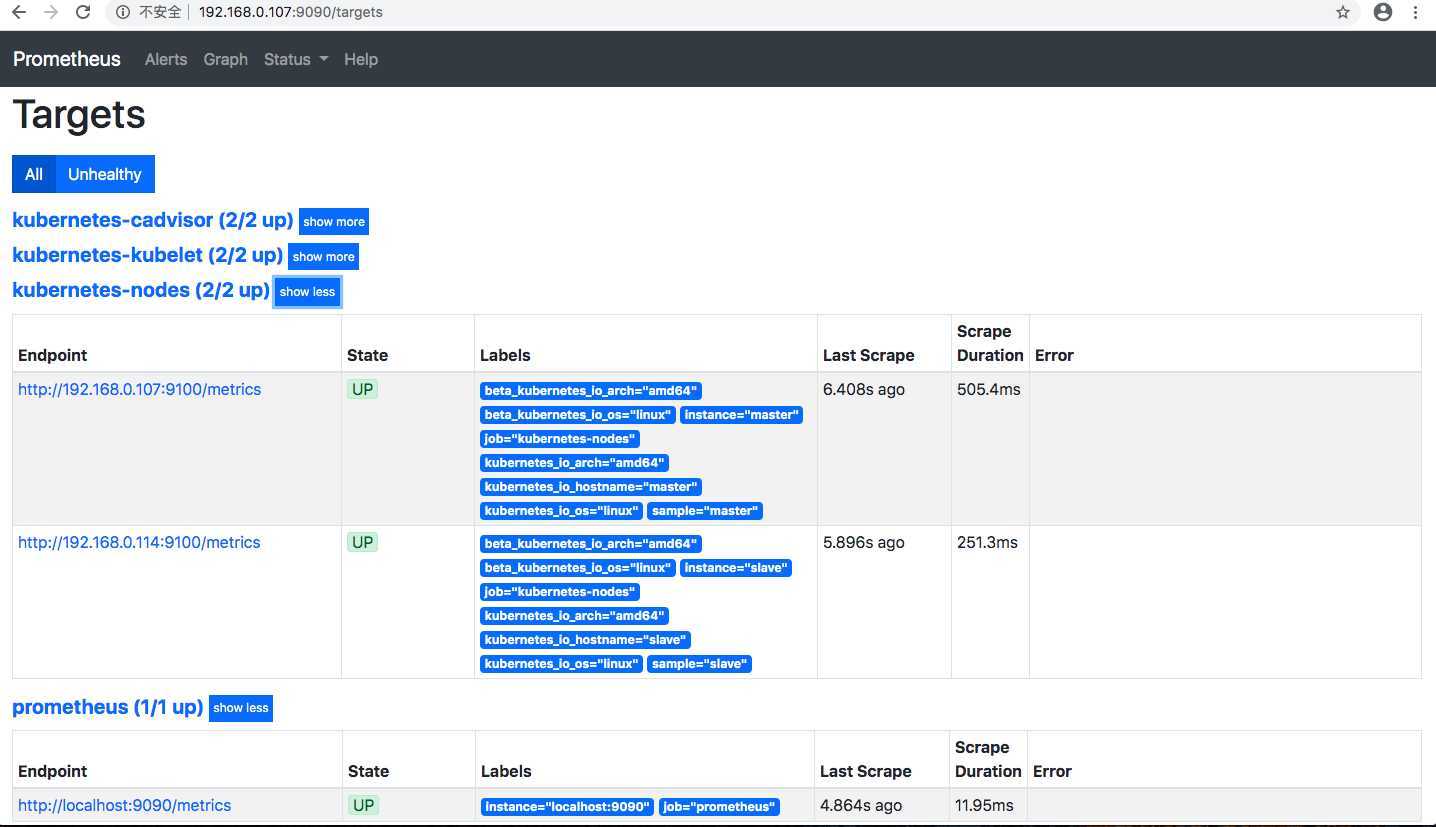

添加完成后重新load 配置文件,查看prometheus监控对象列表

完整的配置文件如下

$ cd /opt/k8s/prometheus

$ cat 2-prom-cnfig.yml

apiVersion: v1

kind: ConfigMap

metadata:

name: prom-config

namespace: monitoring

data:

level=info ts=2020-03-22T12:15:02.551Z caller=head.go:625 component=tsdb msg="WAL segment loaded" segment=10 maxSegment=15

prometheus.yml: |

global:

scrape_interval: 15s

scrape_timeout: 15s

scrape_configs:

- job_name: ‘prometheus‘

static_configs:

- targets: [‘localhost:9090‘]

- job_name: "kubernetes-nodes"

kubernetes_sd_configs:

- role: node

relabel_configs:

- source_labels: [__address__]

regex: ‘(.*):10250‘

replacement: ‘${1}:9100‘

target_label: __address__

action: replace

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- job_name: "kubernetes-kubelet"

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

kubernetes_sd_configs:

- role: node

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- job_name: "kubernetes-cadvisor"

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

kubernetes_sd_configs:

- role: node

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- target_label: __address__

replacement: kubernetes.default.svc:443

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

创建用来存储grafana数据的pv、pvc(采用本地存储、可以通过搭建NFS、GlusterFs等分布式文件系统代替)

$ cd /opt/k8s/prometheus

$ cat>7-grafana-pv.yml<<EOF

kind: PersistentVolume

apiVersion: v1

metadata:

namespace: monitoring

name: grafana

labels:

type: local

app: grafana

spec:

capacity:

storage: 10Gi

accessModes:

- ReadWriteOnce

hostPath:

path: /opt/k8s/prometheus/grafana-pvc

---

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

namespace: monitoring

name: grafana-claim

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

EOF

grafana部署文件

$ cd /opt/k8s/prometheus

$ cat>8-grafana.yml<<EOF

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: grafana

name: grafana

namespace: monitoring

spec:

replicas: 1

selector:

matchLabels:

app: grafana

template:

metadata:

labels:

app: grafana

spec:

containers:

- image: grafana/grafana:6.6.2

name: grafana

ports:

- containerPort: 3000

name: http

readinessProbe:

httpGet:

path: /api/health

port: http

resources:

limits:

cpu: 200m

memory: 400Mi

requests:

cpu: 100m

memory: 200Mi

volumeMounts:

- mountPath: /var/lib/grafana

name: grafana-pvc

readOnly: false

subPath: data

- mountPath: /etc/grafana/provisioning/datasources

name: grafana-pvc

readOnly: false

subPath: datasources

- mountPath: /etc/grafana/provisioning/dashboards

name: grafana-pvc

readOnly: false

subPath: dashboards-pro

- mountPath: /grafana-dashboard-definitions/0

name: grafana-pvc

readOnly: false

subPath: dashboards

nodeSelector:

beta.kubernetes.io/os: linux

securityContext:

runAsNonRoot: true

runAsUser: 65534

volumes:

- name: grafana-pvc

persistentVolumeClaim:

claimName: grafana-claim

---

apiVersion: v1

kind: Service

metadata:

namespace: monitoring

name: grafana

spec:

type: NodePort

ports:

- port: 3000

targetPort: 3000

nodePort: 3000

selector:

app: grafana

EOF

启动grafana

创建grafana挂载目录

$ cd /opt/k8s/prometheus

$ mkdir -p grafana-pvc/data

$ mkdir -p grafana-pvc/datasources

$ mkdir -p grafana-pvc/dashboards-pro

$ mkdir -p grafana-pvc/dashboards

$ chmod -R 777 grafana-pvc

创建默认的数据源文件

$ cd /opt/k8s/prometheus/grafana-pvc/datasources

$ cat > datasource.yaml<<EOF

apiVersion: 1

datasources:

- name: Prometheus

type: prometheus

access: proxy

url: http://prometheus.monitoring.svc:9090

EOF

创建默认的dashboards管理文件

$ cd /opt/k8s/prometheus/grafana-pvc/dashboards-pro

$ cat >dashboards.yaml<<EOF

apiVersion: 1

providers:

- name: ‘0‘

orgId: 1

folder: ‘‘

type: file

editable: true

updateIntervalSeconds: 10

allowUiUpdates: false

options:

path: /grafana-dashboard-definitions/0

EOF

创建默认的dashboard定义文件

可以到 a collection of shared dashboards,找到自己需要的dashboard模版,下载对应的json文件将对应文件存放到/opt/k8s/prometheus/grafana-pvc/dashboards),这里作为示例,下载1 Node Exporter for Prometheus Dashboard CN v20191102,对应的ID是8919。

$ cd /opt/k8s/prometheus/grafana-pvc/dashboards

$ wget https://grafana.com/api/dashboards/8919/revisions/11/download -o node-exporter-k8s.json

因为模版中的数据源默认用的是${DS_PROMETHEUS_111},从界面导入时有配置项供替换,我们直接下载json文件,所以通过直接修改文件,把数据源改成我们在/opt/k8s/prometheus/grafana-pvc/datasources下配置的数据源

$ cd /opt/k8s/prometheus/grafana-pvc/dashboards

$ sed -i "s/\${DS_PROMETHEUS_111}/Prometheus/g" node-exporter-k8s.json

修改title

...

"timezone": "browser",

"title": "k8s-node-monitoring",

...

启动

$ cd /opt/k8s/prometheus/

$ kubectl create -f 7-grafana-pv.yml 8-grafana.yml

通过界面查看,因为我们已经默认设置过数据源、dashboard等信息,可以直接查看对应的dashboard

在部署grafana时,我们配置了默认的数据源、dashboard等信息,主要是为了实现,系统部署后这些默认监控指标可以直接观察,不需要实施人员现场配置。

其他的监控例如使用 Kube-state-metrics以及cAdvisor metrics实现对集群中Deployment、StatefulSet、容器、pod的监控也可以采用这种形式来实现。如可以利用1. Kubernetes Deployment Statefulset Daemonset metrics作为模版,稍微修改满足我们的监控需要,这里就不再展示具体步骤,读者可以自行尝试。

原文:https://www.cnblogs.com/gaofeng-henu/p/12555116.html